Mathematics and the Real World (48 page)

Read Mathematics and the Real World Online

Authors: Zvi Artstein

N

2

, or 8

N

10

, or even 120

N

100

,

we say that the rate of increase is

polynomial

, that is, an expression that is a power of

N

. If the expression giving the number of steps required is of the form

2

N

, or 120

4

N

, or even 10

4000

N

,

the rate of increase is

exponential

. The names reflect how the parameter appears in the expression.

Despite the fact that for a given algorithm there is a difference between a rate of

N

2

and

N

3

, computational complexity theory experts put them together in the polynomial rate category, and similarly with the exponential rate. The class of problems that can be solved by algorithms that reach the result of the calculation at a polynomial rate is called class P.

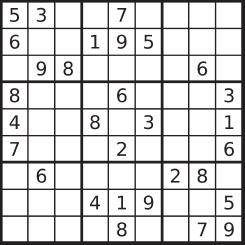

Here there is another interesting distinction, as follows. In many cases it is far easier to check whether a proposed solution to a problem is correct than to find the solution itself. For example, in sudoku there is a 9 × 9 square with numbers between 1 and 9 appearing in some of the smaller squares. The object is to complete the entire grid such that every row and every column and every 3 × 3 sub-square of the nine that make up the whole table contains all the digits from 1 to 9.

Image from Wikimedia Commons/Tim Stellmach

.

The objective of finding the solution, that is, filling all the empty boxes so that each row, column, and so on contains all the digits from 1 to 9, is likely to be difficult and to require many attempts. Yet when someone suggests a solution, it is a simple matter to check whether it is correct. Here too the computability experts are not interested in the number of steps needed to discover whether the proposed solution to a 9 × 9 sudoku is correct or not. They are interested in the number of steps required to check a sudoku whose size keeps increasing, in other words, sudoku of size

N

×

N

, when

N

keeps rising, and in the rate at which the number of steps increases. (A sudoku in which arbitrary

N

numbers have to be arranged still uses an input with a finite number of symbols, as every natural number can be represented, for example, as a decimal number that uses only ten symbols.)

We quoted sudoku purely as an example. The difference between finding a solution and checking a proposed solution arises in many algorithmic tasks; we have already mentioned (in section 36) the public competitions for solving equations. To solve equations is hard. To check if a solution is correct is, in most cases, easy. Also, in checking solutions, a distinction can be drawn between a polynomial rate and an exponential rate. The class of problems for which proposed solutions can be checked using algorithms that suffice with polynomial rates of increase in the number of steps required is called NP (the letter N is derived from the term

non-deterministic

, as the check can be performed simultaneously on all the proposed solutions).

Now here is a chance to win a million dollars. The experts in computability theory do not know if the two collections of problems, those in class P and those in class NP, are the same or different. (It can be proven that every problem in P is also in NP, in other words, if it can be solved at a polynomial rate, then the proposed solution can also be checked at the same rate.) At the beginning of the third millennium the Clay Mathematics Institute in Providence, Rhode Island, published seven unsolved problems in mathematics and offered a million dollars for the solution of each one. The question whether P = NP is one of those questions. sudoku, for example, is in the NP class. If you can prove that every algorithm for solving

N

×

N

sudoku, with

N

rising necessarily, requires a number of steps that depends exponentially on

N

, you will receive a million dollars (provided you do so before anyone else does).

The discussion of P and NP classes is just one example of the many questions that the mathematics of computation deals with. For example, computability experts showed that if you present a polynomial algorithm to a sudoku problem, then for every problem in the NP class there is a polynomial algorithm (in particular, if you do that you will have solved the problem of the equality of P and NP, and you will receive a million dollars). Such problems, the polynomial solution to one of which implies that every problem in the NP class has a polynomial solution, are called NP-complete. Research in computability has so far identified a very large number of NP-complete problems.

The research is also involved in seeking efficient solutions to specific problems, or in proving that there are no efficient solutions to other specific problems. For instance, just a few years ago, in 2002, a polynomial algorithm was presented for checking whether a given number is prime. We will expand on this question in the next section. Another question that has far-reaching implications that we will discuss in section 55 is whether there is an efficient algorithm for finding the prime factors of a number that is the product of two prime numbers. The efficiency characteristic becomes even more precise, for instance, in identifying problems whose solutions require a number of elementary operations that are not larger than a linear expression of the size of the input, that is, an expression of the

type

aN

for a given number

a

, or an expression that is a product of the type

N

ln(

N

), that is, linear in

N

multiplied by the logarithm of

N

. The latter is a slower rate than quadratic, and hence preferable. Hundreds of problems have been examined and the degree of efficiency of their possible solutions has been identified. Many problems are still unsolved; in other words, the rate of increase in the number of steps required to solve them has not yet been identified.

The question arises whether the abstract discussion of the number of steps required to solve a problem with an input of size

N

with

N

increasing is relevant to finding a solution to real problems that interest the users of the computer. No one in his right mind intends to try to solve a sudoku of size one million by one million, let alone of size

N

, as

N

tends to infinity. The answer is surprising. There is no logical argument that relates the rate of increase in the number of steps required as

N

tends to infinity to the number of steps required to solve real problems. Moreover, there are problems in class P that theoretically can be solved efficiently but in practice cannot be solved by today's computers in a reasonable time. Nevertheless, many years of experience have shown that, in general, intuition gained from questions with a parameter that tends to infinity also applies to versions with a large but finite parameter.

This is an appropriate point to discuss the difficulty and validity of intuition rooted in various algorithms and in the way computers work. As an algorithm is a series of instructions, it is easy for the human brain to understand, implement, and even develop intuition about how the algorithm works. Associative thinking, which is the basis of the human thought process, is founded on the approach of deriving one thing from another, a process analogous to an algorithm. The same applies to the computer. It is easy for very young children and youngsters to master the handling of a computer, and they even relate to it intuitively. Not infrequently, if you ask for help related to the working of your computer from someone with computer experience (not necessarily an expert), the answer will be, “Let me sit at the keyboard and I'll find out what to do.” Sitting at the keyboard activates the associative reactions, the intuition. It is often assumed

that this is age-related. I do not think it is. To use the necessary intuition apparently requires you to overcome the psychological barrier, a barrier of fear perhaps, regarding the new machine, to operate it, which seems superficially to be an impossibility. Adults are challenged by this barrier, not youngsters who are growing up in proximity to computers. Nevertheless, at least two difficulties along the route to an intuitive understanding of the computer should be acknowledged. First, as mentioned, the incomprehensible speed of the computer clashes with human intuition, the latter of which developed in an environment where changes occur much more slowly (although it will be easier for the generation born in the computer age to develop such intuition). Second, the computer itself does not “think” associatively. The computer, at least the computer of today, does not think the way humans think. It carries out very precise instructions, coded in the software, and sometimes logical operations are incorporated in the software instructions. To develop intuition about logical arguments is a difficult or even impossible task. Which is why one will come across reactions from even the most experienced experts such as, “I don't know what the computer wants.”

54. PROOFS WITH HIGH PROBABILITY

The title of this section may sound like an oxymoron. Since the Greeks laid the logical foundations of mathematics there has been a deep-rooted consensus that the proof of a mathematical contention is either right or wrong. The title refers to a proof that a mathematical claim or proposition is correct with a certain probability. This does not mean subjective probability reflecting the degree of faith in the correctness of the proof, but rather an absolute probability that can be calculated. This is a new element in the logic of mathematical proofs, an element umbilically related to the possibilities that the computer opens up before us.

In practice, people encounter proofs with certain probabilities routinely in their daily lives. The idea of changing from an absolute precise examination of a claim to a statistical examination has always been inbred

in humans. The stallholder selling apples at the market assures you that all the apples are fresh and perfect. It is impractical to check every single apple, so you will check a few apples, and if you find that the ones you check are all fresh and perfect you will be convinced that the seller's claim is correct, or at least you will consider it highly probable that his claim is correct. It is important that the apples you choose to check are chosen at random, so the seller cannot trick you. The higher you want the probability to be (that his quality claim is correct), the greater the number of apples you must check. If you want to confirm his claim with mathematical certainty, you would have to check all the apples on the stall. Customs officials wanting to satisfy themselves that the contents of a container at the port are as stated on the shipping documents, or Ministry of Health inspectors trying to establish whether the shipment of pharmaceuticals meets the requisite standards, must for reasons of efficiency settle for examining only a sample.

The innovation in proofs with a high probability lies in their mathematical content. Why then would mathematicians agree to detract from the apparent purity of absolute proof? The answer lies in the efficiency obtained by accepting high probability proofs, efficiency in the sense we defined in the previous section. Even in mathematics it is sometimes possible to prove a claim correct efficiently if it is acceptable that the proof is correct only with a high degree of probability. We will now illustrate this and then present a derived concept, and that is how to convince someone that a proposition is correct without divulging anything of the proof itself.

The example relates to prime numbers. We have seen that these numbers played a central role in mathematics throughout the ages and continue to do so in modern mathematics and its uses. Mathematicians have always been seeking efficient ways of checking whether a given number is prime. No efficient way was discovered, however. The naive checks, from the sieve of Eratosthenes described above to more sophisticated checks, require an exponential number of steps as a function of the number being examined so that it is not practical to use these methods to check a number consisting of several hundred digits. In the terms introduced in the previous section, checking the primeness of numbers was not efficient.

In 2002 there was a theoretical leap regarding the efficiency of checking whether a number is prime. The scientists Neeraj Kayal, Nitin Saxena, and Manindra Agarwal of the Indian Institute of Technology, Kanpur, presented a polynomial method of checking whether numbers are prime. The method is known as KSA, after its discoverers. According to the theoretical definition, KSA is efficient, but the algorithm proposed in the system is far from practical. The number of steps used by the algorithm was given by a polynomial of power 8. Since then the system has been refined, and the power is now 6. This is still too high to make the system practical for everyday purposes. Thus, still today, no practical way of checking definitively whether a number is prime has been found.