Oracle Essentials: Oracle Database 11g

Authors: Rick Greenwald

Chapter 11: Oracle and High Availability

Export/Import, Standby Database, or Replication?

All the choices we’ve discussed in this chapter offer you some type of protection against losing critical data—or your entire database. But which one is right for your needs?

To quote the standard answer to so many technical questions, “it depends.” Export/import, whether in its original form or in the Oracle Data Pump, provides a simple and proven method, but the time lag involved with this method typically leaves larger time periods where data is lost in the event of a failure. Transportable tablespaces can provide the same functionality with better performance, but are less granular. A physical standby database typically leaves smaller data gaps or, with the zero-data-loss capability available since Oracle9i, no data gap; however, this solution does require the expense of redundant hardware. More recent database releases somewhat mitigate this expense, as the standby database can be used for queries. Streams replication also requires redundant hardware and ensures consistent and complete data on both the primary and backup server, but this solution is the most resource-intensive of the three.

You should carefully weigh the cost of each of these solutions, both in extra hardware and in performance, against the potential cost of a database or server failure. Of course, any one of these solutions is infinitely more valuable than not implementing any of them and simply hoping that a disaster never happens to you.

Rolling Upgrades

Thus far, we have focused on preventing unplanned downtime. But much of the availability planning in the past included defining planned downtime for system maintenance operations. Today, such downtime has largely disappeared with Oracle’s extensive online management capabilities. For example, Oracle provides online reorganization capabilities in recent releases, a task that often required extensive planning in the past.

The one remaining area that posed an availability challenge until recently is the need to perform upgrades. Today, where RAC configurations leverage ASM, rolling upgrades (introduced in Oracle Database 10g Release 2) are entirely feasible with no downtime. Among the tasks that can be accomplished are system and hardware upgrades, operating system upgrades, patching, and storage migration.

Other Oracle features can minimize downtime for configurations that do not use RAC or ASM. Data Guard can be used to minimize downtime for system, database, and patch set upgrades. Transportable tablespaces and Oracle Streams are useful in speeding database upgrades and platform migrations.

Chapter 12: Oracle and Hardware Architecture

In Chapter 2, we discussed the architecture of the Oracle database, and in Chapter 7, we described how Oracle uses hardware resources. How hardware architectures are chosen and deployed can ultimately determine the specific scalability, performance tuning, management, and reliability options available to you. In fact, systems are sometimes badly configured without consideration of the proper balance of CPUs, memory, and I/O for projected workloads. This can limit options for database tuning if performance later becomes an issue.

Over the years, Oracle has developed new features to address specific platforms and, with Oracle Database 11g, continues this process by building on a commitment to grid computing and information appliance-like configurations. This chapter discusses the various hardware architectures to provide a basis for understanding how Oracle leverages each of these. It covers the following types of hardware systems and how Oracle takes advantage of the features inherent in each of the platforms:

• Uniprocessors (including multicores)

• Symmetric Multiprocessing (SMP) systems

• Clusters

• Non-Uniform Memory Access (NUMA) systems

• Grid computing

We’ll also discuss the use of different disk technologies and how to choose the hardware system that’s most appropriate for your purposes.

System Basics

Any discussion of hardware systems begins with a review of the components that make up a hardware platform and the impact these components have on the overall system. You’ll find the same essential components under the covers of any computer system:

• One or more CPUs, which execute the basic instructions that make up computer programs, possibly with multiple cores to provide added processing power

• Memory, storing recently accessed instructions and data

• An input/output (I/O) system, which typically consists of some combination of disk storage, device controllers for pulling data and programs off physical media, and network controllers for connecting the system to other systems on the network

The number of each of these components and the capabilities of the individual components themselves determine the ultimate cost and scalability of a system. A machine with four processors is typically more expensive and capable of doing more work than a single-processor machine; new versions of components, such as CPUs, are typically faster and often less expensive than older versions.

Online transaction processing (OLTP) systems are most often designed for throughput. In business intelligence or data warehousing systems, it is often assumed that CPU and memory are the performance-limiting components. However, CPU processing power and memory capacity have risen greatly in recent years (especially in the time period since we wrote earlier editions of this book), and providing adequate I/O now deserves special attention for these systems as well.

Each system component has a time to access and transport data, or a latency cost. The latency cost of a component is the amount of latency the use of that component introduces into the system; in other words, how much slower each successive level of a component is than its previous level (e.g., Level 2 versus Level 1; see Table 12-1). Each component also has limited capacity.

The CPU and the Level 1 (L1) memory cache on the CPU have the lowest latency, as shown in Table 12-1, but also the least capacity. Disk has the most capacity but the highest latency.

There are several different types of memory: an L1 cache, which is on the CPU chip; an L2 (Level 2) cache on the CPU surface; an L3 cache on the same board as the CPU; and main memory, which is the remaining memory on the system accessible through the memory bus.

Table 12-1. Typical sizes and latencies of system components

| Element | Typical storage capability | Typical latency |
| --- | --- | --- |
| CPU | None | None |
| L1 cache (on CPU) | 10s to 100s of KBs | 10 nanoseconds |
| L2 cache (on CPU surface) | Single MBs | 40–60 nanoseconds |
| L3 cache (on same board) | 10s of MBs | 120 nanoseconds |
| Main memory | MBs to TB+ | 1,000–10,000 nanoseconds |
| Disk | GBs to hundreds of TBs | 1–10 million nanoseconds |

An important part of tuning any Oracle database involves reducing the need to read data from sources with the greatest latency (e.g., disk) and, when a disk must be accessed, ensuring that there are as few bottlenecks as possible in the I/O subsystem.

As the Oracle database accesses a greater percentage of its data from memory rather than disk, the overall latency of the system is correspondingly decreased and performance increases. For more information about tuning concepts, see Chapter 7.
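The effect of serving more reads from memory can be illustrated with a simple weighted average. The sketch below is only a rough model, not an Oracle calculation: the function name is hypothetical, and the two latency figures are assumed values picked from the ranges in Table 12-1 (10,000 nanoseconds for main memory, 5 million nanoseconds for disk).

```python
def average_access_latency_ns(memory_hit_ratio, memory_latency_ns, disk_latency_ns):
    """Return the weighted-average latency of one data access, in nanoseconds.

    memory_hit_ratio is the fraction of accesses served from memory (0.0 to 1.0);
    the remaining accesses are assumed to go to disk.
    """
    if not 0.0 <= memory_hit_ratio <= 1.0:
        raise ValueError("memory_hit_ratio must be between 0 and 1")
    miss_ratio = 1.0 - memory_hit_ratio
    return memory_hit_ratio * memory_latency_ns + miss_ratio * disk_latency_ns


# Assumed illustrative values taken from the ranges in Table 12-1.
MEMORY_NS = 10_000       # upper end of the main-memory range
DISK_NS = 5_000_000      # middle of the disk range

for ratio in (0.90, 0.99, 0.999):
    latency = average_access_latency_ns(ratio, MEMORY_NS, DISK_NS)
    print(f"{ratio:.1%} of accesses from memory: about {latency:,.0f} ns per access")
```

Under these assumptions, disk latency dominates the average even when only a small fraction of accesses miss memory, which is why reducing physical reads is such a common tuning goal.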

Uniprocessor Systems

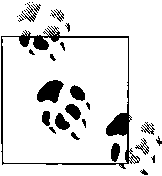

Uniprocessor systems, such as the one shown in Figure 12-1, are the simplest systems in terms of architecture. Each of these systems (typically a standard personal computer) contains a single CPU and a single I/O channel and is made entirely with industry-standard components. They are most often used as single-user machines (for example, for database development or providing browser access over a network). Some uniprocessor machines are also used as small servers for databases, especially where multicore processors are installed.

Figure 12-1. Typical uniprocessor system (components shown: CPU with L1 cache, L2 cache, memory, I/O, disk)

Until the 1990s, uniprocessor systems were frequently used as servers because of their low price and the limited ability of relational databases to fully utilize other types of systems. However, Oracle evolved to take advantage of systems containing multiple CPUs through improved parallelism and more sophisticated optimization. At the same time, the prices of Symmetric Multiprocessing systems (described in the next section) have plummeted, making SMP systems the database hardware servers of choice.

Even in a uniprocessor system, the server operating system supports multiple threads. Multicore processors, which are integrated circuits that contain two or more processors, are becoming common and further enable simultaneous processing of multiple tasks. Hardware platform vendors are racing to provide more cores to differentiate their platforms.

Each thread in a server operating system can be used to support a concurrent process, which can execute in parallel. By default, the PARALLEL_THREADS_PER_CPU parameter in the initialization file is set at 2 for most platforms on which Oracle runs. Oracle can further determine the degree of parallelism based on parameters set in the initialization file or using the adaptive degree of parallelism feature, described in Chapter 7. This adaptive multiuser feature makes use of algorithms that take into account the number of threads. Additional tuning parameters can also affect parallelism, although the need for tuning of such parameters is much diminished in recent Oracle releases.
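To see how PARALLEL_THREADS_PER_CPU relates to the number of CPUs, the sketch below models the default degree of parallelism as the CPU count multiplied by the threads-per-CPU setting. This is a simplified model that assumes a single instance with no other limits applied; the function name is hypothetical, and the adaptive multiuser feature may choose a lower degree on a loaded system.

```python
def default_degree_of_parallelism(cpu_count, parallel_threads_per_cpu=2):
    """Estimate the default degree of parallelism for a single instance.

    Simplified model (an assumption for illustration): CPU count multiplied
    by the PARALLEL_THREADS_PER_CPU setting, which defaults to 2 on most
    platforms according to the text.
    """
    if cpu_count < 1 or parallel_threads_per_cpu < 1:
        raise ValueError("cpu_count and parallel_threads_per_cpu must be at least 1")
    return cpu_count * parallel_threads_per_cpu


# A uniprocessor, a small SMP server, and a larger SMP server.
for cpus in (1, 4, 16):
    dop = default_degree_of_parallelism(cpus)
    print(f"{cpus} CPU(s) -> estimated default degree of parallelism {dop}")
```

The point of the model is only that the default scales with both values, so even a single-CPU system can run more than one parallel execution thread.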

Symmetric Multiprocessing Systems

One of the early limiting factors for a uniprocessor system was the ultimate speed of its processor—all applications have to share this one resource. Symmetric Multiprocessing (SMP) systems were invented in an effort to overcome this limitation by adding CPUs to the memory bus, as shown in Figure 12-2.

Figure 12-2. Typical Symmetric Multiprocessing (SMP) system (components shown: two CPUs, each with its own L1 cache and L2 cache, sharing memory, I/O, and disk)

Each CPU has its own memory cache. Data resident in the cache of one CPU is sometimes needed for processing by a second CPU. Because of this potential sharing of data, the CPUs for such machines must be able to “snoop” the memory bus to determine where copies of data reside and whether the data is being updated. This snooping is managed transparently by the operating system that controls the SMP system. Oracle Standard Edition One, Standard Edition, or Enterprise Edition can be used on these platforms. (Oracle limits the number of CPUs you can deploy using Standard Edition One and Standard Edition while placing no limit on the number of CPUs for Enterprise Edition.)

SMP platforms have been available since the 1980s as midrange platforms, primarily as Unix-based machines. Today, there is a category of entry-level servers featuring mostly 64-bit CPUs (replacing previous-generation 32-bit CPUs). The most popular operating systems in this category are Windows variations and Linux.

SMP servers that can scale to larger sizes from platform vendors such as HP, IBM, and Sun feature variations on this basic design. For example, SMP systems might include multicore CPUs, a larger L2 cache, a faster memory bus, and/or multiple higher-speed I/O channels. Each enhancement is intended to remove potential bottlenecks that can limit performance. Unix and Linux are the most common operating systems used in Oracle implementations on high-end SMP servers.

The number of CPUs possible in an SMP system is limited by the scalability of the system (memory) bus. As more CPUs are added to the bus, the bus itself can become saturated with traffic between the CPUs attached to it.

Systems featuring 64-bit CPUs can handle large amounts of data more efficiently than previous 32-bit CPUs; they support dozens of CPUs on a single system with hundreds of gigabytes of memory.

Of course, the database must have parallelization features to take full advantage of the SMP architecture. Oracle operations such as query execution and other DML activity and data loading can run as parallel processes within the Oracle server, allowing Oracle to take advantage of the benefits of multiprocessor systems. Oracle, like all software systems, benefits from parallel operations, as shown by “Amdahl’s Law”:

Total execution time = (parallel part / number of processors) + serial part

Amdahl’s Law, formulated by mainframe pioneer Gene Amdahl in 1967 to describe performance in mixed parallel and serial workloads, clearly shows that moving an operation from the serial portion of execution to a parallel portion provides the performance increases expected with the use of multiple processors. In the same way, the more serial operations that make up an application, the longer the execution time will be because the sum of the execution time of all serial operations can offset any performance gains realized from the use of multiple processors. In other words, you cannot speed up a serial operation or a sequence of serial operations by adding more processors.
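The formula for Amdahl’s Law can be worked through numerically. The sketch below is a minimal illustration with hypothetical function names and an assumed workload of 90 time units of parallelizable work plus 10 time units of serial work; the figures are not drawn from any Oracle measurement.

```python
def total_execution_time(parallel_part, serial_part, processors):
    """Amdahl's Law: (parallel part / number of processors) + serial part."""
    if processors < 1:
        raise ValueError("processors must be at least 1")
    return parallel_part / processors + serial_part


def speedup(parallel_part, serial_part, processors):
    """Ratio of single-processor execution time to multi-processor time."""
    one_cpu = total_execution_time(parallel_part, serial_part, 1)
    many_cpus = total_execution_time(parallel_part, serial_part, processors)
    return one_cpu / many_cpus


# Assumed workload: 90 time units can run in parallel, 10 are serial.
PARALLEL_PART = 90.0
SERIAL_PART = 10.0

for cpus in (1, 2, 4, 16, 64):
    elapsed = total_execution_time(PARALLEL_PART, SERIAL_PART, cpus)
    gain = speedup(PARALLEL_PART, SERIAL_PART, cpus)
    print(f"{cpus:>2} processors: time {elapsed:6.2f}, speedup {gain:4.2f}x")
```

However many processors are added in this model, the total time never drops below the 10 units of serial work, so the speedup for this workload stays below 10x.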