Oracle Essentials Oracle Database 11g (23 page)

Read Oracle Essentials Oracle Database 11g Online

Authors: Rick Greenwald

Youcan write a trigger directly in PL/SQL or Java, or a trigger can call an existing stored procedure written in either language.

Triggers are fired as a result of a SQL statement that affects a row in a particular table. It’s possible for the actions of the trigger to modify the data in the table or to cause changes in other tables that fire their own triggers. The end result of this may be data that ends up being changed in a way that Oracle thinks is logically illegal.

These situations can cause Oracle to return runtime errors referring to

mutating

tables

, which are tables modified by other triggers, or

constraining tables

, which are tables modified by other constraints. Oracle8

i

eliminated some of the errors caused by activating constraints with triggers.

Oracle8

i

also introduced a very useful set of system event triggers (sometimes called

database-level event triggers

), and user event triggers (sometimes called

schema-level

event triggers

). For example, youcan place a trigger on system events such as database startup and shutdown and on user events such as logging on and logging off.

Query Optimization

All of the data structures discussed so far in this chapter are server entities. Users request data from an Oracle server through database queries. Oracle’s query optimizer must then determine the best way to access the data requested by each query.

108

|

Chapter 4: Oracle Data Structures

One of the great virtues of a relational database is its ability to access data without predefining the access paths to the data. When a SQL query is submitted to an Oracle database, Oracle must decide how to access the data. The process of making this decision is called

query optimization

, because Oracle looks for the optimal way to retrieve the data. This retrieval is known as the

execution path

. The trick behind query optimization is to choose the most efficient way to get the data, since there may be many different options available.

For instance, even with a query that involves only a single table, Oracle can take either of these approaches:

• Use an index to find the ROWIDs of the requested rows and then retrieve those rows from the table.

• Scan the table to find and retrieve the rows; this is referred to as a

full table scan.

Although it’s usually much faster to retrieve data using an index, the process of getting the values from the index involves an additional I/O step in processing the query. Query optimization may be as simple as determining whether the query involves selection conditions that can be imposed on values in the index. Using the index values to select the desired rows involves less I/O and is therefore more efficient than retrieving all the data from the table and then imposing the selection conditions.

Another factor in determining the optimal query execution plan is whether there is an ORDER BY condition in the query that can be automatically implemented by the presorted index. Alternatively, if the table is small enough, the optimizer may decide to simply read all the blocks of the table and bypass the index since it estimates the cost of the index I/O plus the table I/O to be higher than just the table I/O.

The query optimizer has to make some key decisions even with a query on a single table. When a more involved query is submitted, such as one involving many tables that must be joined together efficiently or one that has complex selection criteria and multiple levels of sorting, the query optimizer has a much more complex task.

Prior to Oracle Database 10

g

, you could choose between two different Oracle query optimizers, a

rule-based optimizer

and a

cost-based optimizer

; these are described in the following sections. With Oracle Database 10

g

, the rule-based optimizer is desupported. The references to syntax and operations for the rule-based optimizer in the following sections are provided for reference and are applicable only if youare running an older release of Oracle.

Rule-Based Optimization

Oracle has always had a query optimizer, but until Oracle7 the optimizer was only rule-based. The rule-based optimizer, as the name implies, uses a set of predefined rules as the main determinant of query optimization decisions. Since the rule-based

Query Optimization

|

109

optimizer has been desupported as of Oracle Database 10

g

, your interest in this topic is likely be limited to supporting old Oracle databases where this choice may have been made.

Rule-based optimization sometimes provided better performance than the early versions of Oracle’s cost-based optimizer for specific situations. The rule-based optimizer had several weaknesses, including offering only a simplistic set of rules. The Oracle rule-based optimizer had about 20 rules and assigned a weight to each one of them. In a complex database, a query can easily involve several tables, each with several indexes and complex selection conditions and ordering. This complexity means that there were a lot of options, and the simple set of rules used by the rule-based optimizer might not differentiate the choices well enough to make the best choice.

The rule-based optimizer assigned an optimization score to each potential execution path and then took the path with the best optimization score. Another weakness in the rule-based optimizer was resolution of optimization choices made in the event of a “tie” score. When two paths presented the same optimization score, the rule-based optimizer looked to the syntax of the SQL statement to resolve the tie. The winning execution path was based on the order in which the tables occur in the SQL

statement.

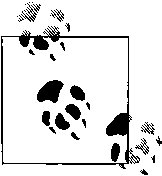

Youcan understand the potential impact of this type of tie-breaker by looking at a simple situation in which a small table with 10 rows, SMALLTAB, is joined to a large table with 10,000 rows, LARGETAB, as shown in

Figure 4-4.

If the optimizer chose to read SMALLTAB first, the Oracle database will read the 10 rows and then read LARGETAB to find the matching rows for each of the 10 rows. If the optimizer chose to read LARGETAB first, the database read 10,000 rows from LARGETAB and then read SMALLTAB 10,000 times to find the matching rows. Of course, the rows in SMALLTAB would probably be cached, reducing the impact of each probe, but you could see a dramatic difference in performance.

Differences like this could occur with the rule-based optimizer as a result of the ordering of the table names in the query. In the previous situation the rule-based optimizer returned the same results for the query, but it used widely varying amounts of resources to retrieve those results.

Cost-Based Optimization

To improve the optimization of SQL statements, Oracle introduced the

cost-based

optimizer

in Oracle7. As the name implies, the cost-based optimizer does more than simply look at a set of optimization rules; instead, it selects the execution path that requires the least number of logical I/O operations. This approach avoids the error

110

|

Chapter 4: Oracle Data Structures

1 logical I/O

per join

Total

SMALLTAB

20 logical I/Os

LARGETAB

10 logical I/Os

1 logical I/O

per join

Total

SMALLTAB

20,000 logical I/Os

LARGETAB

10,000 logical I/Os

Figure 4-4. The effect of optimization choices

discussed in the previous section. After all, the cost-based optimizer would know which table was bigger and would select the right table to begin the query, regardless of the syntax of the SQL statement.

Oracle8 and later versions, by default, use the cost-based optimizer to identify the optimal execution plan. And, since Oracle Database 10

g

, the cost-based optimizer is the only supported optimizer. To properly evaluate the cost of any particular execution plan, the cost-based optimizer uses statistics about the composition of the relevant data structures. These statistics are automatically gathered by default since the Oracle Database 10

g

release into the Automatic Workload Repository (AWR).

Among the statistics gathered in the AWR are database segment access and usage statistics, time model statistics, system and session statistics, SQL statements that produce the greatest loads, and Active Session History (ASH) statistics.

How statistics are used

The cost-based optimizer finds the optimal execution plan by assigning an optimization score for each of the potential execution plans using its own internal rules and logic along with statistics that reflect the state of the data structures in the database.

These statistics relate to the tables, columns, and indexes involved in the execution

plan. The statistics for each type of data structure are listed in Table 4-1.

Query Optimization

|

111

Table 4-1. Database statistics

Data structure

Type of statistics

Table

Number of rows

Number of blocks

Number of unused blocks

Average available free space per block

Number of chained rows

Average row length

Column

Number of distinct values per column

Second-lowest column value

Second-highest column value

Column density factor

Index

Depth of index B*-tree structure

Number of leaf blocks

Number of distinct values

Average number of leaf blocks per key

Average number of data blocks per key

Clustering factor

Oracle Database 10

g

and more current database releases also collect overall system statistics, including I/O and CPU performance and utilization. These statistics are stored in

the data dictionary, described in this chapter’s final section, “Data Dictionary Tables.”

You can see that these statistics can be used individually and in combination to determine the overall cost of the I/O required by an execution plan. The statistics reflect both the size of a table and the amount of unused space within the blocks; this space can, in turn, affect how many I/O operations are needed to retrieve rows. The index statistics reflect not only the depth and breadth of the index tree, but also the uniqueness of the values in the tree, which can affect the ease with which values can be selected using the index.

The accuracy of the cost-based optimizer depends on the accuracy of the statistics it uses, so updating statistics has always been a must.

Formerly, you would have used the SQL statement ANALYZE to compute or estimate these statistics. When managing an older release, many database administrators also used a built-in PL/SQL package, DBMS_STATS, that contains a number of procedures that helped automate the process of collecting statistics.

Stale statistics can lead to database performance problems, which is why database statistics gathering has been automated by Oracle. This statistics gathering can be quite granular. For example, as of Oracle Database 10

g

, youcan enable automatic statistics collection for a table, which can be based on whether a table is either stale (which means that more than 10 percent of the objects in the table have changed) or empty.

112

|

Chapter 4: Oracle Data Structures

The use of statistics makes it possible for the cost-based optimizer to make a much more well-informed choice of the optimal execution plan. For instance, the optimizer could be trying to decide between two indexes to use in an execution plan that involves a selection based on a value in either index. The rule-based optimizer might very well rate both indexes equally and resort to the order in which they appear in the WHERE clause to choose an execution plan. The cost-based optimizer, however, knows that one index contains 1,000 entries while the other contains 10,000

entries. It even knows that the index that contains 1,000 values contains only 20

unique values, while the index that contains 10,000 values has 5,000 unique values.

The selectivity offered by the larger index is much greater, so that index will be assigned a better optimization score and used for the query.

Testing the Effect of New Statistics

There may be times when you don’t want to update your statistics, such as when the distribution of data in your database has reached a steady state or when your queries are already performing optimally (or at least deliver adequate, consistent performance). Oracle gives youa way youcan try out a new set of statistics to see if they might make things better while still maintaining the option of returning to the old set: youcan save your statistics in a separate table and then collect new ones. If, after testing your application with these new statistics, you decide you preferred the way the old statistics worked, you can simply reload the saved statistics.