Surveillance or Security?: The Risks Posed by New Wiretapping Technologies

Authors: Susan Landau

When Cerf and Kahn were initially developing TCP/IP, security was

about reliability and availability, not targeted attacks. Security was not

actually needed. Each computing company was using its own proprietary

protocols on their internal networks. This provided a modicum of security:

problems on one network could not easily propagate to become problems

on another. This is an instance of security through obscurity, a method

of making attacks difficult by hiding how a system works. Security through

obscurity is not considered a wise way to proceed, because experience has

shown that the best method for finding a system's security flaws is through

public examination. The adoption of the TCP/IP protocol by a wider audience occurred in several steps. First, TCP/IP was the protocol designed for

transmission on the ARPANET. Then the National Science Foundation

(NSF) decided to build a network to link scientific researchers with supercomputer centers around the country. This proved to be the tipping point

for the Internet, though no one foresaw this at the time.

"We were building a research network for the U.S. research community,

and perhaps also for the research community in industry," explained

Dennis Jennings, who had been the program director for networking

within NSF's Office of Advanced Scientific Computing during the building

of NSFNET.6 "Our budget was limited. What every researcher wanted us to

do was build a network to his or her computer or workstation, but that

didn't scale." NSF proposed a three-level hierarchy of networks: campus

networks, regional networks, and a backbone network. This was a network

of networks, as it were. While individual researchers were not pleased about

connecting to a campus network, "interestingly campuses were thinking

about networks and they all seized on this [idea]. The stuff took off like

wildfire," said Jennings.

Only one protocol could link the networks in a way that allowed computers running different networking systems to communicate: Cerf and

Kahn's TCP/IP. Some objected: researchers running IBM mainframes

wanted to use the IBM networking protocol, while those running DEC VAX

sought the use of DEC's protocol, and so on. NSF prevailed and TCP/IP

was adopted.

No one envisioned that NSF's decision would lead to the Internet

becoming a national network. TCP/IP's utilization by the larger constituency did not prompt any work on improving the protocol's security.

DARPA's focus was on protecting communications on the Internet, rather

than on protecting the network infrastructure itself.

The security services that preoccupied DARPA at the time were confidentiality, protecting communications against eavesdropping; integrity,

ensuring that communications had not been tampered with; and authenticity, ensuring that the sender being claimed is in fact the originator of

the message.

A principal user of encrypted communications was the Navy, which

found it far cheaper to use the Internet for communications between

Washington and Hawaii than to rent a leased encrypted line.

There was no focus on such attacks as spam, viruses, and the like. Trust

was built in, in the sense that the network was a network for research and

education, and everyone was viewed as everyone else's friend or colleague.

This turned out to be a mistake. Or as retired NSA technical director Brian

Snow9 put it at a scientific meeting in 2007, "[With the Internet] there's

malice out there trying to get you. When you build a refrigerator, you have

to worry about random power surges. The problem is that [Internet] projects are designed assuming random malice rather than targeted attacks."10

Security was simply not viewed as a serious problem for the new communications systems. No one anticipated needing to protect the network

against its users, and so no explicit mechanisms were built in to protect

the network. After all, attacks had never been a serious problem on the

telephone network. The fact that this did not carry over to the new

network was because of the differences I have discussed in the two communication networks, but this was not considered at the time.

Essentially the only devices that could be connected to the PSTN were

telephones. Because telephones are not multipurpose devices and cannot

be programmed to do other tasks, the only serious network attacks the

phone network suffered were "blue box" attacks: users, or devices, whistled

in the phone receiver at the correct frequency,11 tricking the network into

providing free long-distance calls.12 Signaling System 6 thwarts this through "out-of-band" signaling, in which the call-signaling information is transmitted through a different channel than the voice communication.

In contrast with telephones, computers are "smart" devices capable of

being programmed to do many interesting things. That tremendous benefit

can, however, be a problem when this malleability is turned against the

network itself. This was not something that the ARPANET designers

considered.

The PSTN designers opted to handle the problem that systems for data

transfer, whether human speech or file transfer over an electronic network,

are unreliable13 by building a system out of highly reliable components.

Early Internet architects went in the other direction and opted for reliability achieved through redundancy. TCP/IP assumes an unreliable data

delivery mechanism, IP, and then uses a reliable delivery mechanism, TCP,

on top of it. TCP has various mechanisms to ensure this reliability, including congestion control, managing the order of packets received to ensure

none are missed, and opening the connection in the first place. The last of these

is worth discussing in some detail.

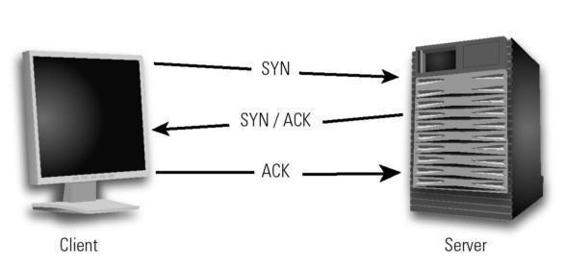

There is a handshake between the two connecting machines establishing their communication; there are messages that flow back and forth

establishing that packets have been received; and the two machines

measure the time elapsed between such acknowledgments, resending if

there has not been a timely response.

Suppose user Alice wants to view an article from Scientific American on

how magic fools the human brain.14 Her machine, the client, makes a

request to the computer hosting the Scientific American web page stating it

wants to establish a connection (figure 3.1). This is the synchronization

message, or SYN. The Scientific American server then responds with a synchronization acknowledgment: SYN ACK. If all is working correctly, Alice's

machine replies with an acknowledgment of its own, ACK, and the connection is established. The server downloads the Scientific American home

page onto Alice's client. The Scientific American home page contains more

information, and this step is actually a sequence of many small steps: a

large number of packets have to flow across the network from the Scientific

American server to Alice's machine.
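
The SYN, SYN-ACK, ACK exchange described above can be sketched as a small state machine. This is a simplified illustration, not an implementation of TCP: the state names (LISTEN, SYN_SENT, SYN_RECEIVED, ESTABLISHED) are borrowed from the TCP specification rather than from the text, the `TRANSITIONS` table and `handshake` function are hypothetical names, and sequence numbers, timers, and packet loss are all left out.

```python
# (current state, message received) -> (next state, reply to send, or None)
TRANSITIONS = {
    ("LISTEN", "SYN"): ("SYN_RECEIVED", "SYN-ACK"),    # server hears the request
    ("SYN_SENT", "SYN-ACK"): ("ESTABLISHED", "ACK"),   # client hears the reply
    ("SYN_RECEIVED", "ACK"): ("ESTABLISHED", None),    # server hears the final ACK
}


def handshake():
    """Simulate the three-way handshake between a client and a server.

    Returns the ordered list of (sender, message) pairs and the final
    state of each machine.
    """
    # The client has just sent its SYN; the server is waiting for requests.
    states = {"client": "SYN_SENT", "server": "LISTEN"}
    trace = [("client", "SYN")]
    sender, message = "client", "SYN"

    # Deliver each message to the other side until no reply is produced.
    while message is not None:
        receiver = "server" if sender == "client" else "client"
        states[receiver], reply = TRANSITIONS[(states[receiver], message)]
        if reply is not None:
            trace.append((receiver, reply))
        sender, message = receiver, reply

    return trace, states


if __name__ == "__main__":
    trace, states = handshake()
    for who, what in trace:
        print(f"{who} sends {what}")
    print(states)
```

Nothing in the table asks who the client is. Either side reaches ESTABLISHED purely by exchanging the three messages, and the absence of authentication discussed later in this section follows from that.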

The Scientific American server starts sending packets and Alice's machine

acknowledges receiving them. If the server does not receive packet acknowledgments within a fixed time window, the server resends the missing

packets. Both machines have timers operating; if appropriate acknowledgments are not received in a timely fashion (a matter of milliseconds), then

the packets (or request for packets) are automatically resent. Once the Scientific American server has received an acknowledgment that the last

packet has been received, it closes the connection to the client; that connection session is terminated.
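
The resend-on-timeout behavior just described can be illustrated with a deliberately simplified simulation. It assumes a stop-and-wait scheme (one packet outstanding at a time), which is simpler than what TCP actually does, and it models a timer expiring as a dropped attempt listed in `lost_attempts`. The function name and its parameters are hypothetical, chosen only for this sketch.

```python
def send_with_retransmission(packets, lost_attempts, max_attempts=5):
    """Deliver packets in order, resending any that go unacknowledged.

    lost_attempts is a set of (packet_index, attempt_number) pairs that the
    simulated network drops. A dropped attempt stands in for the sender's
    timer expiring before an acknowledgment arrives.
    """
    delivered = []
    transmissions = 0
    for index, packet in enumerate(packets):
        for attempt in range(1, max_attempts + 1):
            transmissions += 1
            if (index, attempt) in lost_attempts:
                continue  # no acknowledgment in time: resend the same packet
            delivered.append(packet)  # acknowledged: move on to the next packet
            break
        else:
            # Every attempt was lost; give up on the connection.
            raise TimeoutError(f"packet {index} was never acknowledged")
    return delivered, transmissions


if __name__ == "__main__":
    # The second packet is lost twice before it finally gets through.
    page, sent = send_with_retransmission(["a", "b", "c"], {(1, 1), (1, 2)})
    print(page, sent)
```

The receiver ends up with every packet in order even though the underlying channel dropped some of them, which is the sense in which TCP builds a reliable service on top of an unreliable one.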

Figure 3.1. Client/server interaction. Illustration by Nancy Snyder.

Now that Alice has received the web page at her machine, she starts to

search for the article she wants. Alice types in the appropriate keywords

(e.g., magic brain) in the search field on the Scientific American web page.

When she clicks on the "search" button on the web page, the connection

process starts afresh. Alice's client opens a connection with the Scientific

American server, sends it information ("Perform a search query on 'magic

brain'"), and the connection process begins (handshake, connection establishment), followed by packet exchange and then connection teardown.

Note that the Scientific American server did not "know" Alice before

establishing a connection with her machine. TCP does not require any

form of authentication of the user before connections are established. For

the research environment for which TCP was developed, this made good

sense. The network's purpose was sharing information and authentication

was an unnecessary complication that would have been difficult to implement. (By contrast, the phone company did care about authenticating the

call originator because that is who pays for the call.) Authentication would

also not easily scale. Requiring an introduction before a connection could

be made would have prevented the growth that the network experienced

between the early 1990s and the present.

The real point here is that while the Internet is a communications

network, it is a communications network that behaves nothing like the

telephone network. For some applications such as email, IM, and Voice

over IP conversations, an introduction prior to communication might

make sense. But many other applications function more like a store or library (a library with no requirement for signing out borrowed materials).

For those, an introduction is not only not valuable, it is actually disruptive.

Alice's browsing of the Scientific American website or her browsing of books

and their reviews at Amazon do not, and should not, require an introduction before the connection is established. Even the first examples I

mentioned, email, IM, VoIP, would have difficulty with an introduction

prior to establishing a TCP connection because of Internet-enabled mobility. The IP address Alice's machine has today in the coffee shop is different

from the one it had yesterday at the airport, and it will be different again

tomorrow even if Alice frequents the same coffee shop (unless the coffee

shop has only one IP address available, an unlikely situation). Yet it is the

IP address that is the identifier in the TCP/IP protocol. By contrast, Alice's

mobile telephone has the same number15 regardless of whether she is in

Paris, Texas, or Paris, France.

In deciding to adopt TCP/IP for NSFNET, "Our ambition in 1985 was to

have all three-hundred-and-four research universities connected to NSFNET

by the end of 1986 or early 1987," said Dennis Jennings, who ran the NSF

program that built NSFNET. In that respect, the NSF succeeded spectacularly. "Had we any idea that this would be the network for the world, we

probably would have had to go to the PTTs [Public Telegraph and Telecommunications] or ISOs [International Standards Organizations]. Certainly

the PTTs would have designed a hierarchical system and would have built

in authentication."

Had that occurred, it is likely that the result would have been more

secure than the current Internet. It is also likely that the resulting network

would have lacked the openness and capability for innovation that have

made the Internet so remarkably fruitful. Jennings observed that "had

we known [what was to come], we'd have been terrified and the Internet

[would never have happened]." Jennings paused as he reflected on those

decisions made in the mid-1980s. "And we would have said, 'That's

not within scope; we're building a research network for a research

community.'"

3.3 Cryptography to the Rescue?

For a long time people believed that once strong cryptography was available, the solution to Internet security would be at hand. While this is not

true (security is much more than simply encryption), it is the case that

cryptography is a basic tool for many Internet security problems. I will

take a brief detour to describe aspects of cryptography that play a role in Internet security; for learning the material in appropriate depth, the interested reader is urged to consult one of the large number of books on the

subject.

Cryptography, encoding messages so that only the intended recipient

can understand them, is nearly as old as written communication. A Mesopotamian scribe hid a formula for pottery glaze within cuneiform symbols,

while a Greek at the Persian court used steganography, or hiding a message

within another, to send a communication. The fourth century BCE Indian

political classic, the Arthasastra, urged cryptanalysis as a means of obtaining

intelligence. The Caesar cipher, used by Julius Caesar to communicate with

his generals, shifts each letter of the alphabet some number of letters "to

the right." Thus a Caesar shift of 3 would be: a -> D, b -> E, ..., y -> B, z -> C.
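
The Caesar shift is simple enough to write out in a few lines. The sketch below follows the convention of the example above, with plaintext in lowercase and ciphertext in uppercase. The function names are illustrative, and leaving spaces and punctuation unchanged is an assumption of this sketch, not something the historical cipher specifies.

```python
import string


def caesar_encrypt(plaintext, shift=3):
    """Shift each letter `shift` places to the right, wrapping from z to a."""
    result = []
    for ch in plaintext.lower():
        if ch in string.ascii_lowercase:
            index = (ord(ch) - ord("a") + shift) % 26
            result.append(string.ascii_uppercase[index])
        else:
            result.append(ch)  # assumption: non-letters pass through unchanged
    return "".join(result)


def caesar_decrypt(ciphertext, shift=3):
    """Undo caesar_encrypt by shifting each letter `shift` places to the left."""
    result = []
    for ch in ciphertext.upper():
        if ch in string.ascii_uppercase:
            index = (ord(ch) - ord("A") - shift) % 26
            result.append(string.ascii_lowercase[index])
        else:
            result.append(ch)
    return "".join(result)


if __name__ == "__main__":
    secret = caesar_encrypt("magic brain")
    print(secret)                  # PDJLF EUDLQ
    print(caesar_decrypt(secret))  # magic brain
```

The only secret here is the shift amount; the procedure itself is fixed.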

Cryptography holds within itself an inherent contradiction: the system

must be made available to its users, yet widespread sharing of the system

increases the risk that the system will be compromised. The solution is to

minimize the secret part of the cryptosystem. A nineteenth-century cryptographer, Auguste Kerckhoffs, codified a basic tenet of cryptography: the

cryptosystem's security should rely upon the secrecy of the key, and not

upon the secrecy of the system's encryption algorithm.

The difficulty of breaking a secure system should roughly be the time

it takes for an exhaustive search of the keys. The Caesar cipher, with its

simple structure and simple key-if one can call the "3" of "shift three

letters to the right" the key-is easy to break. More sophisticated ciphers,

including transposition ciphers and more sophisticated substitution

ciphers, were developed in the fifteenth century. By the nineteenth century,

cryptography had become part of popular lore, turning up in such literature as "The Adventure of the Dancing Men" by Arthur Conan Doyle and

"The Gold-Bug" by Edgar Allan Poe. Despite its long history, cryptography

was more a curiosity than a valuable tool. Radio and its transformation of

warfare made cryptography important.
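
To make concrete the earlier remark that the Caesar cipher is easy to break, the following sketch carries out an exhaustive search over its keys. There are only 26 possible shifts, so every one can be tried. The sketch assumes the attacker knows one word that should appear in the plaintext, which is an assumption added here to let the program recognize the right answer; the function names are hypothetical.

```python
import string


def shift_letters(text, shift):
    """Shift every letter `shift` places to the right, wrapping at z."""
    out = []
    for ch in text.lower():
        if ch in string.ascii_lowercase:
            out.append(chr((ord(ch) - ord("a") + shift) % 26 + ord("a")))
        else:
            out.append(ch)  # spaces and punctuation are left alone
    return "".join(out)


def exhaustive_search(ciphertext, known_word):
    """Try all 26 keys and keep the decryptions that contain known_word."""
    hits = []
    for key in range(26):
        candidate = shift_letters(ciphertext, -key)  # undo a shift of `key`
        if known_word in candidate.split():
            hits.append((key, candidate))
    return hits


if __name__ == "__main__":
    ciphertext = shift_letters("magic fools the brain", 3)
    print(exhaustive_search(ciphertext, "brain"))
```

The attacker needs to know only the algorithm, which by Kerckhoffs's tenet should be assumed public. With so small a key space, the secrecy of the key offers essentially no protection.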