Surveillance or Security?: The Risks Posed by New Wiretapping Technologies

Authors: Susan Landau

Initially networks were quite local. The subscriber would ring the local

switch and tell the operator the name of the party with whom they wanted

to speak. The first switches were manual, consisting of panels with jacks

and cables between them. The operator would ring that party and then

connect the two lines on the switchboard via patch cords. While the original operators were teenage boys, their antics soon made clear that more

responsible people were needed, and young women became the telephone

operators of choice.9 A Missouri undertaker designed the first automated

telephone switch.10

We tend to think of a phone number as the name of the phone at

a particular location, but it is actually something else entirely. As Van

Jacobson, one of the early designers of Internet protocols, once put it, "A

phone number is not the name of your mom's phone; it's a program for

the end-office switch fabric to build a path to the destination line card."11

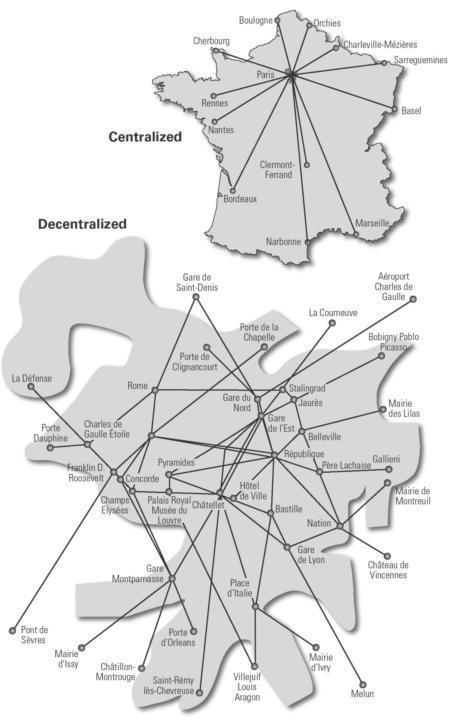

Figure 2.1

Centralized versus decentralized networks. Illustration by Nancy Snyder.

Consider, for example, the U.S. telephone number: 212-930-0800. The

first three digits, the area code, establish the general area of the phone

number; in this case it is New York City. The next three digits, normally called the telephone exchange, represent a smaller geographic area.12 In

our example the last four digits are, indeed, the local exchange's name for

the phone. Taken as a whole, the set of ten digits constitutes a route description; the switching equipment within the network interprets that information much like a program and uses it to form a connection.
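
To make the "route description" reading concrete, here is a minimal Python sketch that splits the example number into the three fields described above. The names RouteDescription and parse_us_number are illustrative assumptions, not part of any real switching software, and an actual switch does far more than split a string.

```python
from typing import NamedTuple


class RouteDescription(NamedTuple):
    area_code: str  # general area, e.g. "212" for New York City
    exchange: str   # smaller geographic area within the area code
    line: str       # the local exchange's name for the phone


def parse_us_number(number: str) -> RouteDescription:
    """Split a ten-digit U.S. number into its three routing fields."""
    digits = "".join(ch for ch in number if ch.isdigit())
    if len(digits) != 10:
        raise ValueError(f"expected ten digits, got {len(digits)}")
    return RouteDescription(digits[:3], digits[3:6], digits[6:])


route = parse_us_number("212-930-0800")
print(route.area_code, route.exchange, route.line)
```

Each field narrows the path a step further, which is why the whole number behaves more like a set of routing instructions than like a name.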

The first thing a modern telephone (and I will start by describing just

landline phones) must do is signal that it is "off hook" and thus ready to

make a call. This happens when the receiver is lifted, which closes a circuit,

creating a dial tone and signaling the central office (the local phone

exchange). Then the subscriber can dial the phone number she wishes to

reach ("dial," of course, being an anachronism from the era of rotary telephones). When the central office receives this number, its job is to determine where to route the call.

If the call is local (that is, within the same area code), then the switches

at the central office need to determine which trunk line, or communication

channel, should be used to route the call to an appropriate intermediate

telephone exchange. This new exchange repeats the process, but this time

connects to the recipient's local exchange. Since the first three digits

denote the local exchange and are thus unnecessary, only the last four

digits of the number are transmitted. The local exchange determines if the

recipient's line is free; if so, it "rings" the line. If the recipient answers, her

receiver closes a circuit to the local exchange, which establishes the call.13

The speakers have a fixed circuit for the call, the one that was created

during the call setup.

This is, of course, a simplified example: the call did not use an area code,

let alone an international code. The other simplification is that the call

described above had only two "hops"; that is, it went through only two

telephone exchanges.

The key goal of the network design was to provide quality of voice

service. Engineers needed to factor in that each time a call goes through

an exchange, it needs to use a repeater to amplify the voice signal. Passing

through a repeater causes the signal to change slightly. Thus the network

needed to minimize the number of times a call would go through an

exchange. The telephone company limits calls to five hops, after which it

deems the degradation in voice quality unacceptable.14 Digital signals do

not face this problem and thus can travel through an arbitrary number of

repeaters. This small engineering difference leads to a remarkable freedom

in system design. Messages can traverse an arbitrarily long path15 to reach

a destination, enabling a more robust network.

The telephone system is built from highly reliable components. The

telephone company believed in service that allowed a user's calls to go through ninety-nine times out of a hundred. Since central office switches

served ten thousand lines, this meant "five 9s" (99.999 percent) reliability (otherwise the 1

percent blocking target could not be met). Of course, more than central office

switches are needed to service a call that travels between two destinations

with different central offices.

At the height of the Cold War, some engineers began thinking about

reliability differently. After all, you might care less about talking to a particular person at a particular moment than about getting the message

through eventually. That is, presuming the other party is in and willing to

answer the phone, you might not be concerned about always being able

to connect each time you dialed, but you might want to ensure that the

message you are attempting to send eventually gets through. This was the

problem that the designers of the Internet tried to solve.

2.2 Creating the Internet

In the 1960s, physicists had realized that the electromagnetic pulse from

a high-altitude nuclear explosion would disrupt, and quite possibly destroy,

electrical systems in a large area. Any centralized communication network

such as the phone system that the United States (and the rest of the world)

used would be in trouble.16 RAND researcher Paul Baran went to work on

this problem.

Since the frequency of AM radio stations would not be disrupted by the

blast, Baran realized the stations could be used to relay messages. He implemented this using a dozen radio stations.17 Meanwhile, using digital networks, Donald Davies of the United Kingdom's National Physical Laboratory

found another solution to the problem. Davies solved Baran's problem

while trying to address a completely different question.

Davies was interested in transmitting large data files across networked

computers.18 The problem is different from voice communications. Data

traffic is bursty: lots of data for a short time, then nothing, then lots again.

Dedicating a telephone circuit to a data transfer did not make a lot of sense;

the line would simply not be used to its full extent,19 and, unlike with voice,

a small delay is not a major issue in transmitting data files.

Both Baran and Davies hit upon the same solution. Redundancy in the

network (multiple distinct ways of going between the sender and the

recipient) was key. Such redundancy is surprisingly cheap to obtain. Say a

network has redundancy 1 if there are exactly enough wires connecting the

nodes so that there is one path between any two nodes; if there are twice

as many wires, call that redundancy 2, and so on.20 Trying experiments

on more traditional communications networks, Baran ran simulations and discovered that with a redundancy level of about 3, "The enemy could

destroy 50, 60, 70% of the targets or more and [the network] would still

work."21 The design resulted in a highly robust system.
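
The redundancy measure can be tried out in a small experiment. The Python sketch below is an illustration under stated assumptions, not a reconstruction of Baran's simulations: it wires nodes together at random (a simplifying choice made here), and every name and parameter in it (build_network, largest_surviving_fraction, simulate, the default of 200 nodes) is hypothetical.

```python
import random


def build_network(n, redundancy, rng):
    """Return a set of links (a, b), a < b, over nodes 0..n-1.

    Starts from a random spanning tree (redundancy 1: n - 1 links, exactly
    one path between any two nodes) and adds random extra links until the
    network has redundancy * (n - 1) links in total.
    """
    target = redundancy * (n - 1)
    if target > n * (n - 1) // 2:
        raise ValueError("too many links requested for this many nodes")
    links = set()
    order = list(range(n))
    rng.shuffle(order)
    for i in range(1, n):
        # Attach each new node to one node that is already connected.
        a, b = order[i], order[rng.randrange(i)]
        links.add((min(a, b), max(a, b)))
    while len(links) < target:
        a, b = rng.sample(range(n), 2)
        links.add((min(a, b), max(a, b)))
    return links


def largest_surviving_fraction(n, links, destroyed):
    """Fraction of surviving nodes that sit in the largest connected group."""
    alive = set(range(n)) - set(destroyed)
    if not alive:
        return 0.0
    neighbours = {node: [] for node in alive}
    for a, b in links:
        if a in alive and b in alive:
            neighbours[a].append(b)
            neighbours[b].append(a)
    seen, best = set(), 0
    for start in alive:
        if start in seen:
            continue
        seen.add(start)
        stack, size = [start], 0
        while stack:  # depth-first walk over one connected group
            node = stack.pop()
            size += 1
            for nxt in neighbours[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        best = max(best, size)
    return best / len(alive)


def simulate(n=200, redundancy=3, destroyed_fraction=0.5, seed=0):
    """Destroy a random share of nodes and report how connected the rest is."""
    rng = random.Random(seed)
    links = build_network(n, redundancy, rng)
    destroyed = rng.sample(range(n), int(n * destroyed_fraction))
    return largest_surviving_fraction(n, links, destroyed)
```

Comparing simulate(redundancy=1) with simulate(redundancy=3) at the same destroyed_fraction gives a rough feel for how much of the surviving network stays connected as links are added; the exact figures depend on the seed and on the random-wiring assumption.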

The distributed networks that Davies and Baran had independently

invented were to be even more decentralized than decentralized networks

of earlier efforts. Network redundancy meant that paths might be much

longer than the typical PSTN communication. Thus the communications

signal had to be digital, not analog. That turned out to be a tremendous

advantage. There was no need for the entire data transfer to occur in one

large message; indeed, efficiency and reliability argued that the message

should be split into small packets.

The idea was that when the packets were received the recipient's

machine sent a message back to the sender saying, "OK; got it." If there

was no acknowledgment, after a short period, the sender's machine would

resend the packet. Of course, because the packets traveled by varied routes,

they might arrive out of order. But the packets could be numbered, and

the receiving end could simply sort them back into order.
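
The acknowledge, resend, and reorder scheme described above can be sketched in a few lines of Python. This is a toy model under loud assumptions: the channel is a random-number generator, an acknowledgment is never lost, no duplicates arrive, and the function names (split_into_packets, send, reassemble) are made up for illustration rather than taken from any real protocol implementation.

```python
import random


def split_into_packets(message: bytes, size: int):
    """Number each chunk so the receiver can restore the original order."""
    return [(seq, message[start:start + size])
            for seq, start in enumerate(range(0, len(message), size))]


def send(packets, loss_rate, rng):
    """Push packets through a lossy channel, resending until each arrives.

    Returns the packets in arrival order, shuffled to mimic packets that
    travelled by varied routes.
    """
    if not 0.0 <= loss_rate < 1.0:
        raise ValueError("loss_rate must be in [0, 1)")
    arrived = []
    for packet in packets:
        while True:
            if rng.random() >= loss_rate:
                arrived.append(packet)  # receiver replies "OK; got it"
                break
            # No acknowledgment came back, so the sender tries again.
    rng.shuffle(arrived)
    return arrived


def reassemble(arrived):
    """Sort by sequence number and join the payloads back together."""
    return b"".join(data for _, data in sorted(arrived))


message = b"The quick brown fox jumps over the lazy dog"
packets = split_into_packets(message, 8)
received = send(packets, loss_rate=0.3, rng=random.Random(7))
assert reassemble(received) == message
```

The sequence numbers carry all the ordering information, so the network itself is free to deliver packets in whatever order the routes happen to produce.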

One of the striking things about this proposed network was that while

the network itself was to be extremely reliable, individual components

need not achieve that same level of reliability. Instead the network

depended on "structural reliability, rather than component reliability."22

Small amounts of redundancy led to vastly increased reliability, a result

surprising to the engineers.23

The Internet's decentralized control meant all machines on the network

were, more or less, peers. No one computer was in charge; the machines

were more or less equal and more or less capable of doing any of the

communication tasks. A computer could be the initiator or recipient of

a communication, or could simply pass a message through to a different

machine. This is the essence of a peer-to-peer network, and very much the

antithesis of the telephone company's hierarchical model of network

communication.

In Britain, the telecommunications establishment supported Davies,24

but in the United States Baran received a chilly reception from AT&T.

Baran was turning all the ideas that AT&T had used to manage their system

upside down. While scientists at the research arm of AT&T were quite

excited by Baran's work, corporate headquarters viewed that approbation

as the reaction of head-in-the-clouds scientists and refused to have anything

to do with Baran's packet-switched network.25 The odd thing about all this

was that the new network was not actually a new network at all. Baran

had built his network on top of the existing telephone network built by Alexander Graham Bell and his successors. It "hooked itself together as a

mesh,"26 simply connecting everything in new ways.

Scientific and technological ideas often emerge when the time is ripe,

and Baran and Davies were not the only ones considering packet-switched networks. (The actual term packet is due to Davies, who wanted

to convey the idea of a small package.) In 1961, Leonard Kleinrock, then

a graduate student at MIT, published the first of a series of papers analyzing

the mathematical behavior of messages traveling on one-way links in a

network. This analysis was critical for building a large-scale packet-switched

network.

One could say, only partially tongue in cheek, that the Internet is due

to Sputnik, the Soviet satellite that in 1957 startled the United States out

of its scientific complacency. In response the U.S. government founded the

Defense Advanced Research Projects Agency (DARPA), a Department of

Defense agency devoted to developing advanced technology for military

use.27 The Internet grew out of an ARPANET project and was perhaps the

most important civilian application that came from DARPA.

In 1966 DARPA hired MIT's Lawrence Roberts to build a network of

different computers that all communicated with one another.28 This would

be a resource-sharing network. Each individual system would follow its

own design with the only requirement being that the various networks be

able to "internetwork" with one another29 through the use of Interface

Message Processors (IMPs). Designing the IMPs fell to a Cambridge, Massachusetts, consulting company, Bolt Beranek and Newman (BBN), one of

whose researchers, Robert Kahn, moved to DARPA.

Kahn realized that only the IMPs would need a common language to

communicate, and this greatly simplified the entire scheme. The other

machines within the individual networks would not need to be transformed in any way in order to communicate with the rest of the system.

These principles made so much sense that forty years later they still govern

the Internet:

• Each individual network would stand on its own and would not need

internal changes in order to connect to the internetwork.

• Communications were on a "best-effort" basis. If a communication did

not go through, it would be retransmitted.

• The IMPs would connect the networks. These gateway machines (now

known as routers and switches) did not store information about the packets

that flowed through them, but simply directed the packets.

• All control would be local; there would be no centralized authority directing traffic.30 (This model is essentially opposite to the PSTN architecture of the time; there are many reasons for this difference, not all of them

technical.31)

With his colleague Vinton Cerf, Kahn developed the fundamental design

principles and communication protocols for the network that became the

Internet; they are the true "fathers of the Internet."

Kahn and Cerf developed the underlying protocol for end-to-end transmissions in 1973. It consisted of the Transmission Control Protocol (TCP)

and the Internet Protocol (IP) and is usually simply abbreviated as TCP/IP.

TCP determines whether a packet has reached its destination, while the IP

address is a numeric address that locates a device on a computer network.

Kahn and Cerf's original version of IP used 32 bits for the address; this is

still in use today. As more and more devices connect to the Internet, the

32-bit address space, which allows for over four billion individual devices

to be located on the Internet, is running out of room.32 The new version

of addressing, IPv6 (Internet Protocol version 6), has 128 bits for addressing, which increases the number of possible Internet addresses by a factor

of 2^96, or more than a billion billion billion times as many.
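
The address-space arithmetic can be checked directly. The short Python sketch below uses only the bit widths given in the text (32 and 128); the variable names are illustrative.

```python
IPV4_BITS = 32
IPV6_BITS = 128

ipv4_addresses = 2 ** IPV4_BITS   # every distinct 32-bit address
ipv6_addresses = 2 ** IPV6_BITS   # every distinct 128-bit address

# Going from 32 to 128 bits multiplies the space by 2 ** (128 - 32).
growth_factor = ipv6_addresses // ipv4_addresses

BILLION = 10 ** 9
assert ipv4_addresses > 4 * BILLION        # "over four billion"
assert growth_factor == 2 ** 96
assert growth_factor > BILLION ** 3        # "a billion billion billion"

print(f"IPv4 addresses: {ipv4_addresses:,}")
print(f"Growth factor:  {growth_factor:.3e}")
```

Python integers have arbitrary precision, so the 128-bit quantities are computed exactly rather than approximated.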