The Information (41 page)

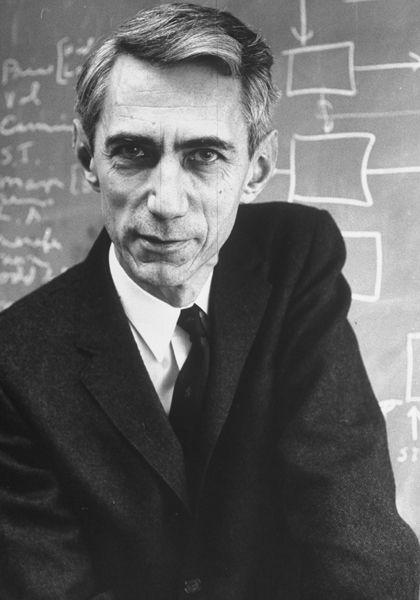

An influential counterpart of Broadbent’s in the United States was George Miller, who helped found the Center for Cognitive Studies at Harvard in 1960. He was already famous for a paper published in 1956 under the slightly whimsical title “The Magical Number Seven, Plus or Minus Two: Some Limits on Our Capacity for Processing Information.”

Seven seemed to be the number of items that most people could hold in working memory at any one time: seven digits (the typical American telephone number of the time), seven words, or seven objects displayed by an experimental psychologist. The number also kept popping up, Miller claimed, in other sorts of experiments. Laboratory subjects were fed sips of water with different amounts of salt, to see how many different levels of saltiness they could discriminate. They were asked to detect differences between tones of varying pitch or loudness. They were shown random patterns of dots, flashed on a screen, and asked how many (below seven, they almost always knew; above seven, they almost always estimated). In one way and another, the number seven kept recurring as a threshold. “This number assumes a variety of disguises,” he wrote, “being sometimes a little larger and sometimes a little smaller than usual, but never changing so much as to be unrecognizable.”

Clearly this was a crude simplification of some kind; as Miller noted, people can identify any of thousands of faces or words and can memorize long sequences of symbols. To see what kind of simplification, he turned to information theory, and especially to Shannon’s understanding of information as a selection among possible alternatives. “The observer is considered to be a communication channel,” he announced, a formulation sure to appall the behaviorists who dominated the profession. Information is being transmitted and stored: information about loudness, or saltiness, or number. He explained about bits:

One bit of information is the amount of information that we need to make a decision between two equally likely alternatives. If we must decide whether a man is less than six feet tall or more than six feet tall and if we know that the chances are 50-50, then we need one bit of information.…

Two bits of information enable us to decide among four equally likely alternatives. Three bits of information enable us to decide among eight equally likely alternatives … and so on. That is to say, if there are 32 equally likely alternatives, we must make five successive binary decisions, worth one bit each, before we know which alternative is correct. So the general rule is simple: every time the number of alternatives is increased by a factor of two, one bit of information is added.

The magical number seven is really just under three bits. Simple experiments measured discrimination, or channel capacity, in a single dimension; more complex measures arise from combinations of variables in multiple dimensions—for example, size, brightness, and hue. And people perform acts of what information theorists call “recoding,” grouping information into larger and larger chunks—for example, organizing telegraph dots and dashes into letters, letters into words, and words into phrases. By now Miller’s argument had become something in the nature of a manifesto. Recoding, he declared, “seems to me to be the very lifeblood of the thought processes.”
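Miller's rule for counting bits, and the claim that seven alternatives amount to just under three bits, can be checked with a few lines of arithmetic. The sketch below is illustrative only; the function name `bits_for_alternatives` is a hypothetical helper, not anything from Miller's paper. It assumes, as Miller's passage does, that all alternatives are equally likely, so the information is the base-2 logarithm of their number.

```python
import math


def bits_for_alternatives(n: int) -> float:
    """Bits needed to select one of n equally likely alternatives (log2 n)."""
    if n < 1:
        raise ValueError("need at least one alternative")
    return math.log2(n)


# Doubling the number of alternatives adds exactly one bit.
for n in (2, 4, 8, 32):
    print(n, "alternatives ->", bits_for_alternatives(n), "bits")

# Seven alternatives fall between two bits (4) and three bits (8).
print(7, "alternatives ->", round(bits_for_alternatives(7), 3), "bits")
```

Thirty-two alternatives come out to exactly five bits, matching the five successive binary decisions in the quoted passage, and seven alternatives come out to roughly 2.81 bits.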

The concepts and measures provided by the theory of information provide a quantitative way of getting at some of these questions. The theory provides us with a yardstick for calibrating our stimulus materials and for measuring the performance of our subjects.… Informational concepts have already proved valuable in the study of discrimination and of language; they promise a great deal in the study of learning and memory; and it has even been proposed that they can be useful in the study of concept formation. A lot of questions that seemed fruitless twenty or thirty years ago may now be worth another look.

This was the beginning of the movement called the cognitive revolution in psychology, and it laid the foundation for the discipline called cognitive science, combining psychology, computer science, and philosophy. Looking back, some philosophers have called this moment the informational turn. “Those who take the informational turn see information as the basic ingredient in building a mind,” writes Frederick Adams. “Information has to contribute to the origin of the mental.”

As Miller himself liked to say, the mind came in on the back of the machine.

Shannon was hardly a household name—he never did become famous to the general public—but he had gained an iconic stature in his own academic communities, and sometimes he gave popular talks about “information” at universities and museums. He would explain the basic ideas; puckishly quote Matthew 5:37, “Let your communication be, Yea, yea; Nay, nay: for whatsoever is more than these cometh of evil” as a template for the notions of bits and of redundant encoding; and speculate about the future of computers and automata. “Well, to conclude,” he said at the University of Pennsylvania, “I think that this present century in a sense will see a great upsurge and development of this whole information business; the business of collecting information and the business of transmitting it from one point to another, and perhaps most important of all, the business of processing it.”

With psychologists, anthropologists, linguists, economists, and all sorts of social scientists climbing aboard the bandwagon of information theory, some mathematicians and engineers were uncomfortable.

Shannon himself called it a bandwagon. In 1956 he wrote a short warning notice—four paragraphs: “Our fellow scientists in many different fields, attracted by the fanfare and by the new avenues opened to scientific analysis, are using these ideas in their own problems.… Although this wave of popularity is certainly pleasant and exciting for those of us working in the field, it carries at the same time an element of danger.”

Information theory was in its hard core a branch of mathematics, he reminded them. He, personally, did believe that its concepts would prove useful in other fields, but not everywhere, and not easily: “The establishing of such applications is not a trivial matter of translating words to a new domain, but rather the slow tedious process of hypothesis and experimental verification.” Furthermore, he felt the hard slogging had barely begun in “our own house.” He urged more research and less exposition.

As for cybernetics, the word began to fade. The Macy cyberneticians held their last meeting in 1953, at the Nassau Inn in Princeton; Wiener had fallen out with several of the group, who were barely speaking to him. Given the task of summing up, McCulloch sounded wistful. “Our consensus has never been unanimous,” he said. “Even had it been so, I see no reason why God should have agreed with us.”

Throughout the 1950s, Shannon remained the intellectual leader of the field he had founded. His research produced dense, theorem-packed papers, pregnant with possibilities for development, laying foundations for broad fields of study. What Marshall McLuhan later called the “medium” was for Shannon the channel, and the channel was subject to rigorous mathematical treatment. The applications were immediate and the results fertile: broadcast channels and wiretap channels, noisy and noiseless channels, Gaussian channels, channels with input constraints and cost constraints, channels with feedback and channels with memory, multiuser channels and multiaccess channels. (When McLuhan announced that the medium was the message, he was being arch. The medium is both opposite to, and entwined with, the message.)

One of Shannon’s essential results, the noisy coding theorem, grew in importance, showing that error correction can effectively counter noise and corruption. At first this was just a tantalizing theoretical nicety; error correction requires computation, which was not yet cheap. But during the 1950s, work on error-correcting methods began to fulfill Shannon’s promise, and the need for them became apparent. One application was exploration of space with rockets and satellites; they needed to send messages very long distances with limited power. Coding theory became a crucial part of computer science, with error correction and data compression advancing side by side. Without it, modems, CDs, and digital television would not exist. For mathematicians interested in random processes, coding theorems are also measures of entropy.
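The idea that added redundancy plus a little computation can counter noise can be shown with the simplest possible scheme, a threefold repetition code decoded by majority vote. This is a minimal sketch under stated assumptions: it is not Shannon's construction and is far less efficient than the codes developed in the 1950s and after. The function names and the bit-flipping channel model are illustrative choices, not taken from the text.

```python
import random


def encode(bits, repeat=3):
    """Repeat every bit `repeat` times (a simple repetition code)."""
    return [b for b in bits for _ in range(repeat)]


def noisy_channel(bits, flip_prob, rng):
    """Flip each transmitted bit independently with probability flip_prob."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in bits]


def decode(received, repeat=3):
    """Recover each original bit by majority vote over its repeated copies."""
    return [
        1 if sum(received[i:i + repeat]) > repeat // 2 else 0
        for i in range(0, len(received), repeat)
    ]


message = [1, 0, 1, 1, 0, 0, 1, 0]
rng = random.Random(0)  # fixed seed so the demonstration is repeatable
received = noisy_channel(encode(message), flip_prob=0.05, rng=rng)
print("sent:   ", message)
print("decoded:", decode(received))
```

Any single flipped bit within a group of three is corrected by the vote; two flips in the same group still cause an error. The price is a threefold drop in transmission rate, which is the kind of trade-off that more sophisticated codes reduce.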

Shannon, meanwhile, made other theoretical advances that planted seeds for future computer design. One discovery showed how to maximize flow through a network of many branches, where the network could be a communication channel or a railroad or a power grid or water pipes. Another was aptly titled “Reliable Circuits Using Crummy Relays” (though this was changed for publication to “… Less Reliable Relays”).

He studied switching functions, rate-distortion theory, and differential entropy. All this was invisible to the public, but the seismic tremors that came with the dawn of computing were felt widely, and Shannon was part of that, too.

As early as 1948 he completed the first paper on a problem that he said, “of course, is of no importance in itself”: how to program a machine to play chess. People had tried this before, beginning in the eighteenth and nineteenth centuries, when various chess automata toured Europe and were revealed every so often to have small humans hiding inside. In 1910 the Spanish mathematician and tinkerer Leonardo Torres y Quevedo built a real chess machine, entirely mechanical, called El Ajedrecista, that could play a simple three-piece endgame, king and rook against king.

Shannon now showed that computers performing numerical calculations could be made to play a full chess game. As he explained, these devices, “containing several thousand vacuum tubes, relays, and other elements,” retained numbers in “memory,” and a clever process of translation could make these numbers represent the squares and pieces of a chessboard. The principles he laid out have been employed in every chess program since. In these salad days of computing, many people immediately assumed that chess would be solved: fully known, in all its pathways and combinations. They thought a fast electronic computer would play perfect chess, just as they thought it would make reliable long-term weather forecasts. Shannon made a rough calculation, however, and suggested that the number of possible chess games was more than 10^120, a number that dwarfs the age of the universe in nanoseconds. So computers cannot play chess by brute force; they must reason, as Shannon saw, along something like human lines.
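The comparison between the number of chess games and the age of the universe in nanoseconds can be made concrete with rough arithmetic. The sketch below rests on assumptions that are not in the passage above: the per-move and per-game figures are the ones usually attributed to Shannon's own estimate (about a thousand possibilities for each pair of moves, over a game of about forty moves), and the age of the universe is taken as roughly 13.8 billion years.

```python
import math

# Assumed inputs for the rough estimate (not stated in the text above).
POSSIBILITIES_PER_MOVE_PAIR = 10**3   # one White move followed by one Black move
MOVE_PAIRS_PER_GAME = 40              # length of a typical game

game_count = POSSIBILITIES_PER_MOVE_PAIR ** MOVE_PAIRS_PER_GAME

# Assumed age of the universe: about 13.8 billion years.
AGE_OF_UNIVERSE_YEARS = 13.8e9
SECONDS_PER_YEAR = 365.25 * 24 * 3600
age_in_nanoseconds = AGE_OF_UNIVERSE_YEARS * SECONDS_PER_YEAR * 1e9

print("log10(number of games):      ", math.log10(game_count))
print("log10(age of universe in ns):", round(math.log10(age_in_nanoseconds), 1))
```

Under these assumptions the game count is 10^120, while the age of the universe is on the order of 10^26 nanoseconds, a gap of more than ninety orders of magnitude.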

He visited the American champion Edward Lasker in his apartment on East Twenty-third Street in New York, and Lasker offered suggestions for improvement.

When Scientific American published a simplified version of his paper in 1950, Shannon could not resist raising the question on everyone’s minds: “Does a chess-playing machine of this type ‘think’?”

From a behavioristic point of view, the machine acts as though it were thinking. It has always been considered that skillful chess play requires the reasoning faculty. If we regard thinking as a property of external actions rather than internal method the machine is surely thinking.

Nonetheless, as of 1952 he estimated that it would take three programmers working six months to enable a large-scale computer to play even a tolerable amateur game. “The problem of a learning chess player is even farther in the future than a preprogrammed type. The methods which have been suggested are obviously extravagantly slow. The machine would wear out before winning a single game.”

The point, though, was to look in as many directions as possible for what a general-purpose computer could do.

He was exercising his sense of whimsy, too. He designed and actually built a machine to do arithmetic with Roman numerals: for example, IV times XII equals XLVIII. He dubbed this THROBAC I, an acronym for Thrifty Roman-numeral Backward-looking Computer. He created a “mind-reading machine” meant to play the child’s guessing game of odds and evens. What all these flights of fancy had in common was an extension of algorithmic processes into new realms: the abstract mapping of ideas onto mathematical objects. Later, he wrote thousands of words on scientific aspects of juggling, with theorems and corollaries, and included from memory a quotation from E. E. Cummings: “Some son-of-a-bitch will invent a machine to measure Spring with.”
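The Roman-numeral example mentioned above (IV times XII equals XLVIII) can be reproduced in software. This is only a sketch of the arithmetic, not of how THROBAC I worked: the text does not describe the machine's internal design, and the approach here, converting to ordinary integers, multiplying, and converting back, is an assumption made for illustration. All function names are hypothetical.

```python
# Symbol values in descending order, including the subtractive pairs.
ROMAN_SYMBOLS = [
    (1000, "M"), (900, "CM"), (500, "D"), (400, "CD"),
    (100, "C"), (90, "XC"), (50, "L"), (40, "XL"),
    (10, "X"), (9, "IX"), (5, "V"), (4, "IV"), (1, "I"),
]
LETTER_VALUES = {"I": 1, "V": 5, "X": 10, "L": 50, "C": 100, "D": 500, "M": 1000}


def from_roman(numeral: str) -> int:
    """Convert a well-formed Roman numeral to an integer."""
    total = 0
    for i, letter in enumerate(numeral):
        value = LETTER_VALUES[letter]
        # A smaller letter before a larger one is subtracted (IV = 4).
        if i + 1 < len(numeral) and LETTER_VALUES[numeral[i + 1]] > value:
            total -= value
        else:
            total += value
    return total


def to_roman(number: int) -> str:
    """Convert an integer from 1 to 3999 to a Roman numeral."""
    if not 0 < number < 4000:
        raise ValueError("number out of range for standard Roman numerals")
    parts = []
    for value, symbol in ROMAN_SYMBOLS:
        count, number = divmod(number, value)
        parts.append(symbol * count)
    return "".join(parts)


def roman_multiply(a: str, b: str) -> str:
    """Multiply two Roman numerals and return the product as a Roman numeral."""
    return to_roman(from_roman(a) * from_roman(b))


print(roman_multiply("IV", "XII"))
```

Run as written, the last line prints XLVIII, the product given in the text.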

In the 1950s Shannon was also trying to design a machine that would repair itself. If a relay failed, the machine would locate and replace it. He speculated on the possibility of a machine that could reproduce itself, collecting parts from the environment and assembling them. Bell Labs was happy for him to travel and give talks on such things, often demonstrating his maze-learning machine, but audiences were not universally delighted. The word “Frankenstein” was heard. “I wonder if you boys realize what you’re toying around with there,” wrote a newspaper columnist in Wyoming.