Through the Language Glass: Why the World Looks Different in Other Languages

Authors: Guy Deutscher

When the experiment was repeated under such conditions of verbal interference, the Russians no longer reacted more quickly to shades across the siniy-goluboy border, and their reaction time depended only on the objective distance between the shades. The results of the interference task point clearly at language as the culprit for the original differences in reaction time. Kay and Kempton’s original hunch that linguistic interference with the processing of color occurs on a deep and unconscious level has thus received strong support some two decades later. After all, in the Russian blues experiment, the task was a purely visual-motoric exercise, and language was never explicitly invited to the party. And yet somewhere in the chain of reactions between the photons touching the retina and the movement of the finger muscles, the categories of the mother tongue nevertheless got involved, and they speeded up the recognition of the color differences when the shades had different names. The evidence from the Russian blues experiment thus gives more credence to the subjective reports of Kay and Kempton’s participants that shades with different names looked more distant to them.

An even more remarkable experiment to test how language meddles with the processing of visual color signals was devised by four researchers from Berkeley and Chicago—Aubrey Gilbert, Terry Regier, Paul Kay (same one), and Richard Ivry. The strangest thing about the setup of their experiment, which was published in 2006, was the unexpected number of languages it compared. Whereas the Russian blues experiment involved speakers of exactly two languages, and compared their responses to an area of the spectrum where the color categories of the two languages diverged, the Berkeley and Chicago experiment was different, because it compared . . . only English.

At first sight, an experiment involving speakers of only one language may seem a rather left-handed approach to testing whether the mother tongue makes a difference to speakers’ color perception. Difference from what? But in actual fact, this ingenious experiment was rather dexterous, or, to be more precise, it was just as adroit as it was a-gauche. For what the researchers set out to compare was nothing less than the left and right halves of the brain.

Their idea was simple, but like most other clever ideas, it appears simple only once someone has thought of it. They relied on two facts about the brain that have been known for a very long time. The first fact concerns the seat of language in the brain: for a century and a half now scientists have recognized that linguistic areas in the brain are not evenly divided between the two hemispheres. In 1861, the French surgeon Pierre Paul Broca exhibited before the Paris Society of Anthropology the brain of a man who had died on his ward the day before, after suffering from a debilitating brain disease. The man had lost his ability to speak years earlier but had maintained many other aspects of his intelligence. Broca’s autopsy showed that one particular area of the man’s brain had been completely destroyed: brain tissue in the frontal lobe of the left hemisphere had rotted away, leaving only a large cavity full of watery liquid. Broca concluded that this particular area of the left hemisphere must be the part of the brain responsible for articulate speech. In the following years, he and his colleagues conducted many more autopsies on people who had lost their ability to speak, and the same area of their brains turned out to be damaged. This proved beyond doubt that the particular section of the left hemisphere, which later came to be called “Broca’s area,” was the main seat of language in the brain.

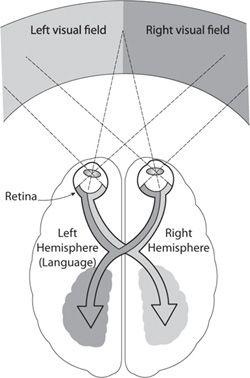

Processing of the left and right visual fields in the brain

The second well-known fact that the experiment relied on is that each hemisphere of the brain is responsible for processing visual signals from the opposite half of the field of vision. As shown in the illustration above, there is an X-shaped crossing over between the two halves of the visual field and the two brain hemispheres: signals from our left side are sent to the right hemisphere to be processed, whereas signals from the right visual field are processed in the left hemisphere.

If we put the two facts together—the seat of language in the left hemisphere and the crossover in the processing of visual information—it follows that visual signals from our right side are processed in the same half of the brain as language, whereas what we see on the left is processed in the hemisphere without a significant linguistic component.

The researchers used this asymmetry to check a hypothesis that seems incredible at first (and even second) sight: could the linguistic meddling affect the visual processing of color in the left hemisphere more strongly than in the right? Could it be that people perceive colors differently, depending on which side they see them on? Would English speakers, for instance, be more sensitive to shades near the green-blue border when they see these on their right-hand side rather than on the left?

To test this fanciful proposition, the researchers devised a simple odd-one-out task. The participants had to look at a computer screen and to focus on a little cross right in the middle, which ensured that whatever appeared on the left half of the screen was in their left visual field and vice versa. The participants were then shown a circle made out of little squares, as in the picture above (and in color in figure 9). All the squares were of the same color except one. The participants were asked to press one of two buttons, depending on whether the odd square out was in the left half of the circle or in the right. In the picture above, the odd square out is roughly at eight o’clock, so the correct response would be to press the left button. The participants were given a series of such tasks, and in each one the odd one out changed color and position. Sometimes it was blue whereas the others were green, sometimes it was green but a different shade from all the other greens, sometimes it was green but the others were blue, and so on. As the task is simple, the participants generally pressed the correct button. But what was actually being measured was the time it took them to respond.

As expected, the speed of recognizing the odd square out depended principally on the objective distance between the shades. Regardless of whether it appeared on the left or on the right, participants were always quicker to respond the farther the shade of the odd one out was from the rest. But the startling result was a significant difference between the reaction patterns in the right and in the left visual fields. When the odd square out appeared on the right side of the screen, the half that is processed in the same hemisphere as language, the border between green and blue made a real difference: the average reaction time was significantly shorter when the odd square out was across the green-blue border from the rest. But when the odd square out was on the left side of the screen, the effect of the green-blue border was far weaker. In other words, the speed of the response was much less influenced by whether the odd square out was across the green-blue border from the rest or whether it was a different shade of the same color.

So the left half of English speakers’ brains showed the same response toward the blue-green border that Russian speakers displayed toward the siniy-goluboy border, whereas the right hemisphere showed only weak traces of a skewing effect. The results of this experiment (as well as a series of subsequent adaptations that have corroborated its basic conclusions) leave little room for doubt that the color concepts of our mother tongue interfere directly in the processing of color. Short of actually scanning the brain, the two-hemisphere experiment provides the most direct evidence so far of the influence of language on visual perception.

Short of scanning the brain? A group of researchers from the University of Hong Kong saw no reason to fall short of that. In 2008, they published the results of a similar experiment, only with a little twist. As before, the recognition task involved staring at a computer screen, recognizing colors, and pressing one of two buttons. The difference was that the doughty participants were asked to complete this task while lying in the tube of an MRI scanner. MRI, or magnetic resonance imaging, is a technique that produces online scans of the brain by measuring the level of blood flow in its different regions. Since increased blood flow corresponds to increased neural activity, the MRI scanner measures (albeit indirectly) the level of neural activity in any point of the brain.

In this experiment, the mother tongue of the participants was Mandarin Chinese. Six different colors were used: three of them (red, green, and blue) have common and simple names in Mandarin, while three other colors do not (see figure 10). The task was very simple: the participants were shown two squares on the screen for a split second, and all they had to do was indicate by pressing a button whether the two squares were identical in color or not.

The task did not involve language in any way. It was again a purely visual-motoric exercise. But the researchers wanted to see if language areas of the brain would nevertheless be activated. They assumed that linguistic circuits would more likely get involved with the visual task if the colors shown had common and simple names than if there were no obvious labels for them. And indeed, two specific small areas in the cerebral cortex of the left hemisphere were activated when the colors were from the easy-to-name group but remained inactive when the colors were from the difficult-to-name group.

To determine the function of these two left-hemisphere areas more accurately, the researchers administered a second task to the participants, this time explicitly language-related. The participants were shown colors on the screen, and while their brains were being scanned they were asked to say aloud what each color was called. The two areas that had been active earlier only with the easy-to-name colors now lit up as being heavily active. So the researchers concluded that the two specific areas in question must house the linguistic circuits responsible for finding color names.

If we project the function of these two areas back to the results of the first (purely visual) task, it becomes clear that when the brain has to decide whether two colors look the same or not, the circuits responsible for visual perception ask the language circuits for help in making the decision, even if no speaking is involved. So for the first time, there is now direct neurophysiologic evidence that areas of the brain that are specifically responsible for name finding are involved with the processing of purely visual color information.