How to Read a Paper: The Basics of Evidence-Based Medicine

Authors: Trisha Greenhalgh

In contrast, a

systematic review

is an overview of primary studies which:

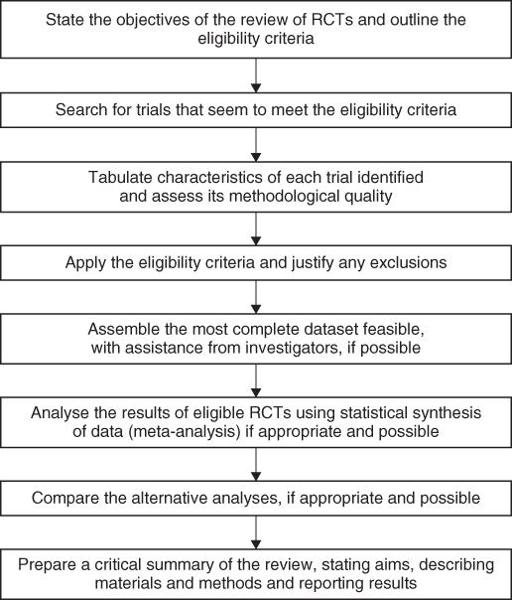

- contains a statement of objectives, sources and methods;

- has been conducted in a way that is explicit, transparent and reproducible (see

Figure 9.1

).

Figure 9.1

Method for a systematic review.

The most enduring and reliable systematic reviews, notably those undertaken by the Cochrane Collaboration (see section ‘Specialised resources’), are regularly updated to incorporate new evidence.

As my colleague Paul Knipschild observed some years ago, Nobel Prize winner Linus Pauling [2] once published a review, based on selected referencing of the studies that supported his hypothesis, showing that vitamin C cured the common cold. A more objective analysis showed that whilst one or two trials did indeed suggest an effect, a true estimate based on all the available studies suggested that vitamin C had no effect at all on the course of the common cold. Pauling probably did not deliberately intend to deceive his readers, but because his enthusiasm for his espoused cause outweighed his scientific objectivity, he was unaware of the selection bias influencing his choice of papers. Evidence shows that if you or I were to attempt what Pauling did (that is, hunt through the medical literature for ‘evidence’ to support our pet theory), we would make an equally idiosyncratic and unscientific job of it [3]. Some advantages of the systematic review are given in Box 9.1.

Experts, who have been steeped in a subject for years and know what the answer ‘ought’ to be, were once shown to be significantly less able to produce an objective review of the literature in their subject than non-experts [4]. This would have been of little consequence if experts' opinion could be relied upon to be congruent with the results of independent systematic reviews, but at the time they most certainly couldn't [5]. These condemning studies are still widely quoted by people who would replace all subject experts (such as cardiologists) with search-and-appraisal experts (people who specialise in finding and criticising papers on any subject). But no one in more recent years has replicated the findings—in other words, perhaps we should credit today's experts with more of a tendency to base their recommendations on a thorough assessment of the evidence! As a general rule, if you want to seek out the best objective evidence of the benefits of (say) different anticoagulants in atrial fibrillation, you should ask someone who is an expert in systematic reviews to work alongside an expert in atrial fibrillation.

To be fair to Pauling [2], he did mention a number of trials whose results seriously challenged his theory that vitamin C prevents the common cold. But he described all such trials as ‘methodologically flawed’. So were many of the trials that Pauling did include in his analysis, but because their results were consistent with Pauling's views, he was, perhaps subconsciously, less critical of weaknesses in their design [6].

Box 9.1 Advantages of systematic reviews [2]

- Explicit methods limit bias in identifying and rejecting studies.

- Conclusions are hence more reliable and accurate.

- Large amounts of information can be assimilated quickly by health care providers, researchers and policymakers.

- Delay between research discoveries and implementation of effective diagnostic and therapeutic strategies is reduced (see Chapter 12).

- Results of different studies can be formally compared to establish generalisability of findings and consistency (lack of heterogeneity) of results (see section ‘Likelihood ratios’).

- Reasons for heterogeneity (inconsistency in results across studies) can be identified and new hypotheses generated about particular subgroups (see section ‘Likelihood ratios’).

- Quantitative systematic reviews (meta-analyses) increase the precision of the overall result (see sections ‘Were preliminary statistical questions addressed?’ and ‘Ten questions to ask about a paper that claims to validate a diagnostic or screening test’).
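The precision and heterogeneity points in Box 9.1 can be made concrete. The sketch below assumes fixed-effect inverse-variance pooling, one common meta-analytic method (not necessarily the one any particular review uses), and also computes Cochran's Q and the I² statistic as conventional measures of heterogeneity. The function name and the three log odds ratios in the usage line are hypothetical, chosen only for illustration.

```python
import math

def pool_fixed_effect(estimates, std_errors):
    """Inverse-variance fixed-effect pooling of per-study effect estimates.

    Returns (pooled estimate, pooled standard error, Cochran's Q, I^2 in %).
    """
    # Each study is weighted by the inverse of its variance.
    weights = [1.0 / se ** 2 for se in std_errors]
    total_w = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total_w
    pooled_se = math.sqrt(1.0 / total_w)
    # Cochran's Q: weighted squared deviations of each study from the pooled value.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, estimates))
    df = len(estimates) - 1
    # I^2: share of total variation attributed to between-study heterogeneity.
    i_squared = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return pooled, pooled_se, q, i_squared

# Hypothetical log odds ratios and standard errors from three trials.
pooled, se, q, i2 = pool_fixed_effect([-0.30, -0.20, -0.40], [0.20, 0.25, 0.30])
print(f"pooled={pooled:.3f}, SE={se:.3f}, Q={q:.2f}, I2={i2:.0f}%")
```

With these made-up inputs the pooled standard error comes out smaller than that of any single study, which is what ‘increased precision’ means in practice; a high I² would flag the kind of inconsistency that the reviewer is then asked to explain.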

I mention this example to illustrate the point that, when undertaking a systematic review, not only must the search for relevant articles be thorough and objective but the criteria used to reject articles as ‘flawed’ must be explicit and independent of the results of those trials. In other words, you don't trash a trial because all other trials in this area showed something different (see section ‘Explaining heterogeneity’); you trash it because, whatever the results showed, the trial's objectives or methods did not meet your inclusion criteria or quality standard (see section ‘The science of “trashing” papers’).
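One way to picture this principle: if the quality standard is written as a rule that receives only a description of a trial's methods, it cannot be swayed by the trial's results. The field names, the 80% follow-up threshold and the three trials below are hypothetical, a sketch of the idea rather than any published checklist.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrialMethods:
    """Design features only: deliberately holds no outcome data."""
    randomised: bool
    allocation_concealed: bool
    blinded_outcome_assessment: bool
    proportion_followed_up: float  # 0.0 to 1.0

@dataclass(frozen=True)
class Trial:
    name: str
    methods: TrialMethods
    effect_estimate: float  # the result; never consulted by the quality rule

# Hypothetical threshold, fixed in the protocol before any results are read.
MIN_FOLLOW_UP = 0.8

def meets_quality_standard(methods: TrialMethods) -> bool:
    """Include or reject a trial on its methods alone."""
    return (
        methods.randomised
        and methods.allocation_concealed
        and methods.blinded_outcome_assessment
        and methods.proportion_followed_up >= MIN_FOLLOW_UP
    )

trials = [
    Trial("A", TrialMethods(True, True, True, 0.92), effect_estimate=-0.5),
    Trial("B", TrialMethods(True, True, True, 0.88), effect_estimate=0.1),
    Trial("C", TrialMethods(False, False, False, 0.60), effect_estimate=-0.9),
]
# Only the methods record is passed to the rule.
included = [t.name for t in trials if meets_quality_standard(t.methods)]
print(included)  # ['A', 'B']
```

Trial C reports the most striking effect but is rejected anyway, and trial B is kept even though its result points the other way, because the decision never looks at the effect estimate.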

Evaluating systematic reviews

One of the major developments in evidence-based medicine (EBM) since I wrote the first edition of this book in 1995 has been the agreement on a standard, structured format for writing up and presenting systematic reviews. The original version of this was called the QUOROM statement (equivalent to the CONSORT format for reporting randomised controlled trials discussed in section ‘Randomised controlled trials’). It was subsequently updated as the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement [7]. Systematic reviews and meta-analyses written up to these structured checklists are a whole lot easier to find your way around. Here are some questions based on the PRISMA checklist (but greatly shortened and simplified) to ask about any systematic review of quantitative evidence.

Question One: What is the important clinical question that the review addressed?

Look back to Chapter 3, in which I explained the importance of defining the question when reading a paper about a clinical trial or other form of primary research. I called this getting your bearings because one sure way to be confused about a paper is to fail to ascertain what it is about. The definition of a specific answerable question is, if anything, even more important (and even more frequently omitted!) when preparing an overview of primary studies. If you have ever tried to pull together the findings of a dozen or more clinical papers into an essay, editorial or summary notes for an examination, you will know that it is all too easy to meander into aspects of the topic that you never intended to cover.

The question addressed by a systematic review needs to be defined very precisely, as the reviewer must make a dichotomous (yes/no) decision as to whether each potentially relevant paper will be included or, alternatively, rejected as ‘irrelevant’. The question, ‘do anticoagulants prevent strokes in patients with atrial fibrillation?’ sounds pretty specific, until you start looking through the list of possible studies to include. Does ‘atrial fibrillation’ include both rheumatic and non-rheumatic forms (which are known to be associated with very different risks of stroke), and does it include intermittent atrial fibrillation? My grandfather, for example, used to go into this arrhythmia for a few hours on the rare occasions when he drank coffee and would have counted as a ‘grey case’ in any trial.

Does ‘stroke’ include both ischaemic stroke (caused by a blocked blood vessel in the brain) and haemorrhagic stroke (caused by a burst blood vessel)? And, talking of burst blood vessels, shouldn't we be weighing the side effects of anticoagulants against their possible benefits? Does ‘anticoagulant’ mean the narrow sense of the term (i.e. drugs that work on the clotting cascade) such as heparin, warfarin and dabigatran, or does it also include drugs that reduce platelet stickiness, such as aspirin and clopidogrel? Finally, should the review cover trials on people who have already had a previous stroke or transient ischaemic attack (a mild stroke that gets better within 24 h), or should it be limited to trials on individuals without these major risk factors for a further stroke? The ‘simple’ question posed earlier is becoming unanswerable, and we must refine it as follows:

To assess the effectiveness and safety of warfarin-type anticoagulant therapy in secondary prevention (i.e. following a previous stroke or transient ischaemic attack) in patients with all forms of atrial fibrillation: comparison with antiplatelet therapy [8].
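The refined question can be read as a yes/no eligibility rule applied to every candidate paper. The sketch below is a hypothetical encoding: the record fields, the drug lists (treating the coumarin vitamin K antagonists as ‘warfarin-type’ is our assumption) and the example studies are illustrative, not taken from the review cited.

```python
from dataclasses import dataclass

# Hypothetical vocabularies; a real review would define these in its protocol.
WARFARIN_TYPE = {"warfarin", "acenocoumarol", "phenprocoumon"}
ANTIPLATELET = {"aspirin", "clopidogrel"}

@dataclass(frozen=True)
class Study:
    participants_have_af: bool  # any form: rheumatic, non-rheumatic, intermittent
    prior_stroke_or_tia: bool   # secondary prevention population
    intervention: str
    comparator: str

def is_eligible(study: Study) -> bool:
    """Dichotomous include/exclude decision against the refined question."""
    return (
        study.participants_have_af
        and study.prior_stroke_or_tia
        and study.intervention.lower() in WARFARIN_TYPE
        and study.comparator.lower() in ANTIPLATELET
    )

candidates = {
    "warfarin vs aspirin after TIA": Study(True, True, "Warfarin", "Aspirin"),
    "warfarin vs aspirin, no prior stroke": Study(True, False, "Warfarin", "Aspirin"),
    "heparin vs placebo after stroke": Study(True, True, "Heparin", "Placebo"),
}
for label, study in candidates.items():
    print(f"{label}: {'include' if is_eligible(study) else 'exclude'}")
```

Writing the criteria down this explicitly forces each ‘grey case’ (intermittent fibrillation, antiplatelet drugs, primary prevention) to be settled once, in the protocol, rather than paper by paper.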

Question Two: Was a thorough search carried out of the appropriate database(s) and were other potentially important sources explored?

As Figure 9.1 illustrates, one of the benefits of a systematic review is that, unlike a narrative or journalistic review, the author is required to tell you where the information in it came from and how it was processed. As I explained in Chapter 2, searching the Medline database for relevant articles is a sophisticated science, and even the best Medline search will miss important papers. The reviewer who seeks a comprehensive set of primary studies must approach the other databases listed in section ‘Primary studies—tackling the jungle’, and sometimes many more (e.g. in a systematic review of the diffusion of innovations in health service organisations, my colleagues and I searched a total of 15 databases, 9 of which I'd never even heard of when I started the study [9]).

In the search for trials to include in a review, the scrupulous avoidance of linguistic imperialism is a scientific as well as a political imperative. As much weight must be given, for example, to the expressions ‘Eine Placebo-kontrollierte Doppel-blindstudie’ and ‘une étude randomisée à double insu face au placebo’ as to ‘a double-blind, randomised controlled trial’ [6], although omission of other-language studies is not, generally, associated with biased results (it's just bad science) [10]. Furthermore, particularly where a statistical synthesis of results (meta-analysis) is contemplated, it may be necessary to write and ask the authors of the primary studies for data that were not included in their published reports (see section ‘Meta-analysis for the non-statistician’).

Even when all this has been done, the systematic reviewer's search for material has hardly begun. As Knipschild and colleagues [6] showed when they searched for trials on vitamin C and cold prevention, their electronic databases only gave them 22 of their final total of 61 trials. Another 39 trials were uncovered by hand-searching the manual Index Medicus database (14 trials not identified previously), and searching the references of the trials identified in Medline (15 more trials), the references of the references (9 further trials), and the references of the references of the references (one additional trial not identified by any of the previous searches).
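The arithmetic of Knipschild's search is worth checking, because it shows how quickly the returns from each extra stage diminish. The counts are those quoted above; the stage labels are our own shorthand.

```python
from itertools import accumulate

# Trials found at each stage of the search, as reported by Knipschild and colleagues [6]
stages = {
    "electronic databases": 22,
    "hand-search of Index Medicus": 14,
    "references of Medline trials": 15,
    "references of references": 9,
    "references of references of references": 1,
}

total = sum(stages.values())
beyond_electronic = total - stages["electronic databases"]

# Running total after each successive stage.
for (stage, found), running in zip(stages.items(), accumulate(stages.values())):
    print(f"{stage:<42} +{found:>2}  cumulative {running}")

print(f"total: {total}; found beyond electronic searching: {beyond_electronic}")  # 61; 39
print(f"share found electronically: {stages['electronic databases'] / total:.0%}")  # 36%
```

The cumulative column runs 22, 36, 51, 60, 61: electronic searching found barely more than a third of the trials, and each further layer of reference-chasing added fewer.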

Do not be too hard on a reviewer, however, if he or she has not followed this counsel of perfection to the letter. After all, Knipschild and his team [6] found that only one of the trials not identified in Medline met stringent criteria for methodological quality and ultimately contributed to their systematic review of vitamin C in cold prevention. The use of more laborious search methods (such as pursuing the references of references, citation chaining, writing to all the known experts in the field, and hunting out ‘grey literature’; see Box 9.2 and also section ‘Primary studies—tackling the jungle’) may be of greater relative importance when looking at trials outside the medical mainstream. For example, in health service management, my own team showed that only around a quarter of relevant, high-quality papers were turned up by electronic searching [11].

Box 9.2 Checklist of data sources for a systematic review

- Medline database

- Cochrane controlled clinical trials register (see ‘Synthesised sources’)

- Other medical and paramedical databases (see the whole of Chapter 2)

- Foreign language literature

- ‘Grey literature’ (theses, internal reports, non-peer-reviewed journals, pharmaceutical industry files)

- References (and references of references, etc.) listed in primary sources

- Other unpublished sources known to experts in the field (seek by personal communication)

- Raw data from published trials (seek by personal communication)

Question Three: Was methodological quality assessed and the trials weighted accordingly?

Chapters 3 and 4 and Appendix 1 provide some checklists for assessing whether a paper should be rejected outright on methodological grounds. But given that only around 1% of clinical trials are said to be beyond criticism methodologically, the practical question is how to ensure that a ‘small but perfectly formed’ study is given the weight it deserves in relation to a larger study whose methods are adequate but more open to criticism. As the PRISMA statement emphasises, the key question is the extent to which the methodological flaws are likely to have biased the review's findings [7].
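PRISMA's question is whether flaws could have biased the findings. One common way to probe this, and our choice of illustration here rather than a method this chapter prescribes, is a sensitivity analysis: pool the results with and without the studies judged at high risk of bias and see whether the answer moves. The studies and numbers below are invented, and the pooling is a fixed-effect inverse-variance sketch.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class StudyResult:
    name: str
    log_odds_ratio: float
    std_error: float
    high_risk_of_bias: bool  # hypothetical judgement from a quality assessment

def inverse_variance_pool(studies):
    """Fixed-effect pooled log odds ratio and its standard error."""
    weights = [1.0 / s.std_error ** 2 for s in studies]
    pooled = sum(w * s.log_odds_ratio for w, s in zip(weights, studies)) / sum(weights)
    return pooled, math.sqrt(1.0 / sum(weights))

studies = [
    StudyResult("small, rigorous", -0.10, 0.30, high_risk_of_bias=False),
    StudyResult("large, flawed", -0.60, 0.10, high_risk_of_bias=True),
    StudyResult("medium, rigorous", -0.15, 0.20, high_risk_of_bias=False),
]

all_pooled, _ = inverse_variance_pool(studies)
robust_pooled, _ = inverse_variance_pool([s for s in studies if not s.high_risk_of_bias])
print(f"all studies: {all_pooled:.2f}; low risk of bias only: {robust_pooled:.2f}")
```

Because inverse-variance weighting rewards size, not rigour, the large flawed study dominates the first estimate (about -0.48); dropping it pulls the pooled log odds ratio towards zero (about -0.13), a warning that the overall result leans on the weakest evidence.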